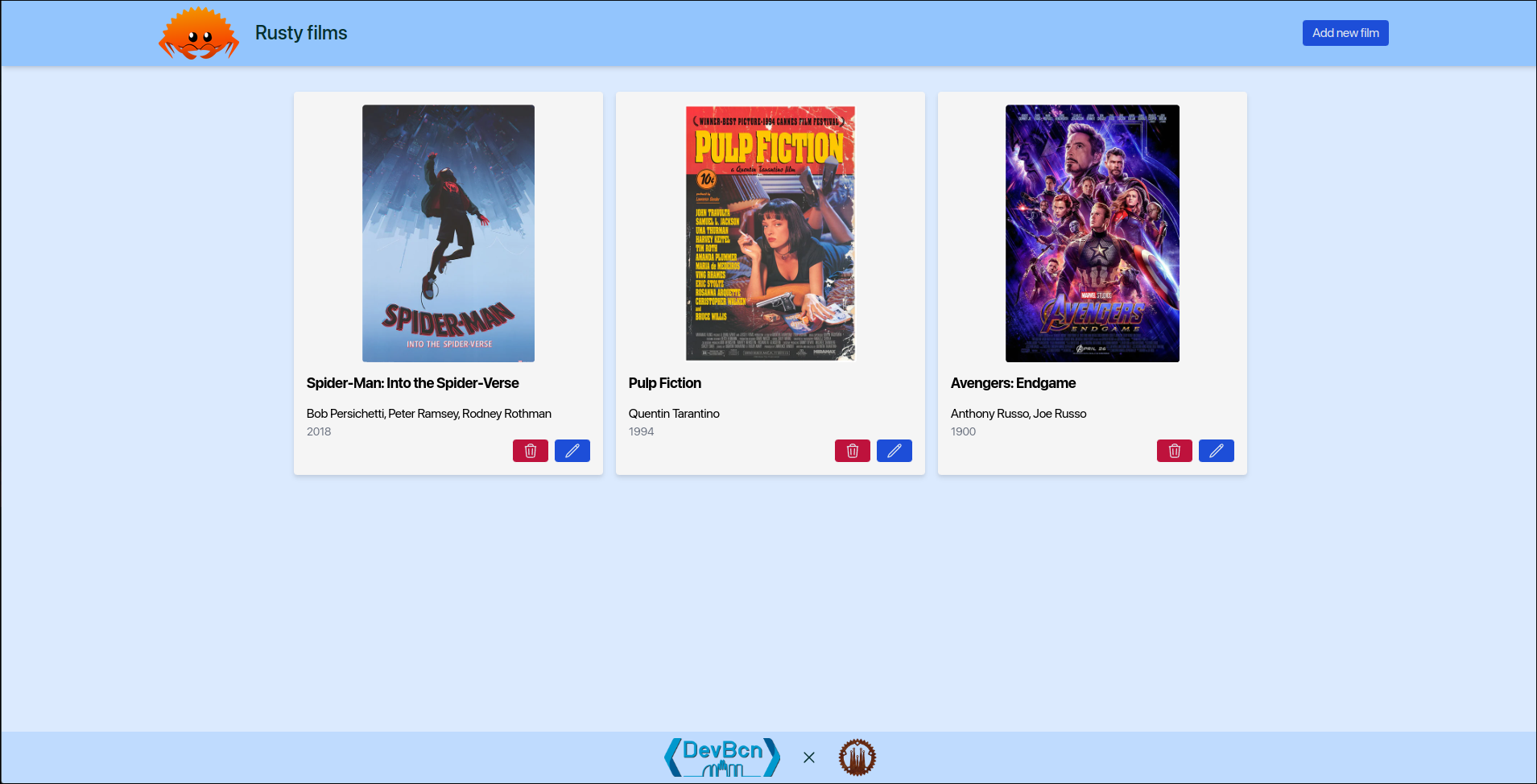

Building a Movie Collection Manager - Full Stack Workshop with Rust, Actix, SQLx, Dioxus, and Shuttle

Welcome to this workshop! In this hands-on workshop, we will guide you through the process of building a full stack application using Rust for the API, Actix-Web as the web framework, SQLx for database connectivity, Dioxus for the front-end, and Shuttle for deployment. This workshop assumes that you have a basic understanding of Rust and its syntax.

Throughout the workshop, you will learn how to set up a Rust project with Actix-Web, implement CRUD operations for movies, establish database connectivity with PostgreSQL using SQLx, design a responsive front-end with Dioxus, and deploy the application to a hosting environment using Shuttle.

By the end of the workshop, you will have built a functional movie collection manager application. You will understand how to create APIs with Actix-Web, work with databases using SQLx, design and develop the front-end with Dioxus, and deploy the application using Shuttle. This workshop will provide you with practical experience and insights into building full stack applications with Rust.

Prerequisites:

- Basic knowledge of the Rust programming language

- Familiarity with HTML, CSS, and JavaScript is helpful but not required

Check the Prerequisites section of the workshop guide for more details.

Workshop Duration: 4.5 hours

Workshop schedule

1. Knowing the audience and installing everything

- Introduction to the workshop

- Installing Rust, Cargo, and other dependencies

2. Building the API with Actix-Web, SQLx and Shuttle

- Introduction to Shuttle and Actix-Web and their features

- Setting up and deploying an Actix-Web project

- Establishing database connectivity with SQLx

- Creating API endpoints for movie listing

- Implementing CRUD operations for movies

3. Designing the Front-End with Dioxus

- Introduction to Dioxus

- Setup and installation

- Components

- State management

- Event handling

- Building

The revised workshop schedule incorporates deployment with Shuttle, allowing participants to learn how to prepare and deploy the application to a hosting environment.

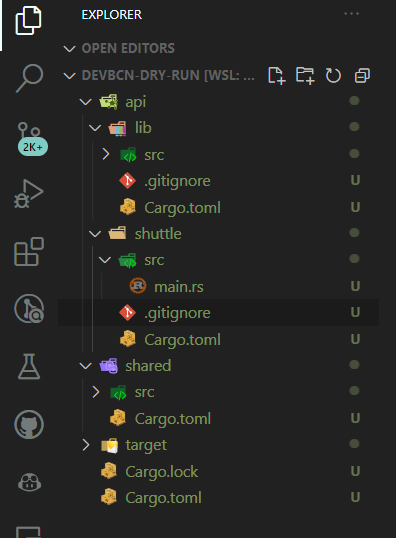

Repository Structure:

├── api # Rust API code
│ ├── lib # Actix-Web API code
│ └── shuttle # Shuttle project
├── front # Dioxus front-end code
├── shared # Common code shared between the API and the front-end
└── README.md # Workshop instructions and guidance

Resources:

- Rust: https://www.rust-lang.org/

- Actix-Web: https://actix.rs/

- SQLx: https://github.com/launchbadge/sqlx

- Dioxus: https://dioxuslabs.com/

- Shuttle: https://www.shuttle.rs/

We hope you enjoy the workshop and gain valuable insights into building full stack applications with Rust, Actix, SQLx, Dioxus, and Shuttle. If you have any questions or need assistance, please don't hesitate to ask during the workshop. Happy coding!

Prerequisites

In order to start the workshop there are a few things that we will have to install or set up.

Rust

If you don't have Rust installed on your machine yet, please follow these instructions.

Visual Studio Code

You can use whatever IDE you want, but we're going to use Visual Studio Code as our code editor.

If you're going to use Visual Studio Code as well, please install the following extensions:

Shuttle

This is the tool and platform that we're going to use to deploy our backend (api & database).

You can follow this installation guide or just do:

cargo install cargo-shuttle

Dioxus

Dioxus is the framework that we're going to use to build our frontend.

Be sure to install the Dioxus CLI:

cargo install dioxus-cli

After that, make sure the wasm32-unknown-unknown target for Rust is installed:

rustup target add wasm32-unknown-unknown

Docker

We will also need to have Docker installed in order to run the project locally while we're developing the backend, since Shuttle uses it to provide the local database.

DBeaver

We will use DBeaver to connect to the database and run queries. Feel free to use any other tool that you prefer.

cargo-watch

We will also use cargo-watch to automatically recompile our backend when we make changes to the code.

cargo install cargo-watch

cargo-make

Finally, let's install cargo-make:

cargo install cargo-make

We're going to leverage cargo-make to run all the commands that we need to run in order to build and deploy our backend and frontend.

Backend

The goal of this part of the workshop is to create a simple API that will be used by the front-end.

Tools and Frameworks

Take the time to read the documentation of each of these tools and frameworks to learn more.

Guide

If you get lost during the workshop, you can always refer to:

- the workshop conductors

- the workshop GitHub repository, which contains the final code with tests, mocks, an in-memory database, CI/CD, etc.

- the dry-run workshop GitHub repository: each commit corresponds to a step of the workshop. You will see that some sections will instruct you to commit your code. You can always refer to this repository to see what the code should look like at that point.

Workspace Setup

Let's start by creating a new workspace for our project.

You can learn more about workspaces in the Rust Book.

The basic idea is that we will create a monorepo with different crates that will be compiled together.

Remember that our project will have this structure:

├── api # Rust API code
│ ├── lib # Actix-Web API code
│ └── shuttle # Shuttle project
├── front # Dioxus front-end code
├── shared # Common code shared between the API and the front-end
└── README.md # Workshop instructions and guidance

Creating the workspace

Create a new folder for the project and initialize a new workspace by creating a Cargo.toml file with the following content:

[workspace]
members = [
    "api/lib",
    "api/shuttle",
    "shared"
]
resolver = "2"

Don't worry, we will add the front crate later.

Initializing the repository

Let's initialize a new git repository for our project.

git init

Creating the crates

Now that we have a workspace, we can create the crates that will be part of it.

For the API, we will create two crates:

- lib: Library containing the code for the API.

- shuttle: Executable for the Shuttle project.

Having two different crates is totally optional, but it will allow us to have a cleaner project structure and will make it easy to reuse the API library code if we decide to not use Shuttle in the future.

Shuttle will allow us to run our API locally and deploy it to the cloud with minimal effort, but it is not required to build the API.

We could decide to use a different executable to run our API that would use the lib crate as a dependency. For instance, we could use Actix Web directly to create such a binary and release it as a Docker image.

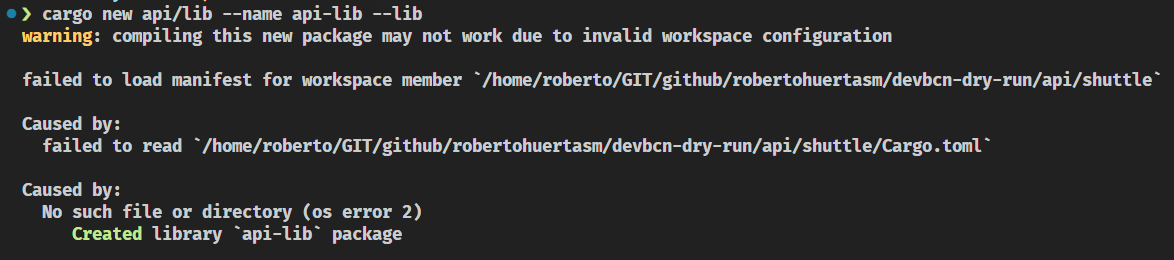

Creating the lib crate

Let's create the lib crate by running the following command:

cargo new api/lib --name api-lib --lib --vcs none

Note that we are using the --lib flag to create a library crate. If you forget to add this flag, you will have to manually change the Cargo.toml file to make it a library crate.

The --vcs none flag is used in this case to tell cargo not to initialize a new git repository. Remember that we already did that in the previous step.

Creating the shuttle crate

Let's create the shuttle crate by running the following command:

cargo shuttle init api/shuttle -t actix-web --name api-shuttle

Creating the shared crate

Finally, let's create the shared crate by running the following command:

cargo new shared --lib

Note we're not using the --name flag this time. This is because the name of the crate will be inferred from the name of the folder.

Building the project

Let's build the project to make sure everything is working as expected.

cargo build

You should see something like this:

As you can see, a new target folder has been created. This folder contains the compiled code for all the crates in the workspace and that's why we're seeing so many objects to be committed.

The target folder should be ignored by git.

Let's create a .gitignore file in the root of our repo and add the following content:

target/
Secrets*.toml

Aside from that, remove all the .gitignore files from the crates as they are not needed anymore.

This is how it should look:

Committing the changes

If you have arrived here, you can commit your changes.

git add .

git commit -m "Initial commit"

Exploring Shuttle

Open the api/shuttle folder and look for the src/main.rs file. This is the entry point of our application.

You'll see something like this:

use actix_web::{get, web::ServiceConfig};
use shuttle_actix_web::ShuttleActixWeb;

#[get("/")]
async fn hello_world() -> &'static str {
    "Hello World!"
}

#[shuttle_runtime::main]
async fn actix_web() -> ShuttleActixWeb<impl FnOnce(&mut ServiceConfig) + Send + Clone + 'static> {
    let config = move |cfg: &mut ServiceConfig| {
        cfg.service(hello_world);
    };

    Ok(config.into())
}

Shuttle has generated a simple hello-world Actix Web application for us.

As you can see, it's pretty straightforward.

The actix_web function is the entry point of our application. It returns a ShuttleActixWeb instance that will be used by Shuttle to run our application.

In this function, we're going to configure our different routes. In this case, we only have one route: /, which is mapped to the hello_world function.
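To make the shape of actix_web concrete, here is a std-only sketch of the same pattern. A function returns a configuration closure, and a runtime calls it later to register routes. RouteTable, configure and respond are hypothetical stand-ins, not Actix Web's ServiceConfig or Shuttle's runtime. They only mimic the idea that service returns &mut Self (so calls can be chained) and that an unregistered path answers with a 404.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for Actix Web's `ServiceConfig`: it only maps a path to a handler.
#[derive(Default)]
pub struct RouteTable {
    routes: HashMap<&'static str, fn() -> &'static str>,
}

impl RouteTable {
    /// Registers a handler and returns `&mut Self`, so calls can be chained.
    pub fn service(&mut self, path: &'static str, handler: fn() -> &'static str) -> &mut Self {
        self.routes.insert(path, handler);
        self
    }

    /// Returns (status, body); paths that were never registered get a 404.
    pub fn respond(&self, path: &str) -> (u16, String) {
        match self.routes.get(path) {
            Some(handler) => (200, handler().to_string()),
            None => (404, String::new()),
        }
    }
}

fn hello_world() -> &'static str {
    "Hello World!"
}

/// Mirrors the shape of `actix_web` in main.rs: build a closure now, let the runtime call it later.
pub fn configure() -> impl FnOnce(&mut RouteTable) + Send + Clone + 'static {
    move |cfg: &mut RouteTable| {
        cfg.service("/", hello_world);
    }
}
```

The Send + Clone + 'static bounds are copied from the generated signature. A closure that captures nothing satisfies all of them, which is why the generated code compiles without extra effort.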

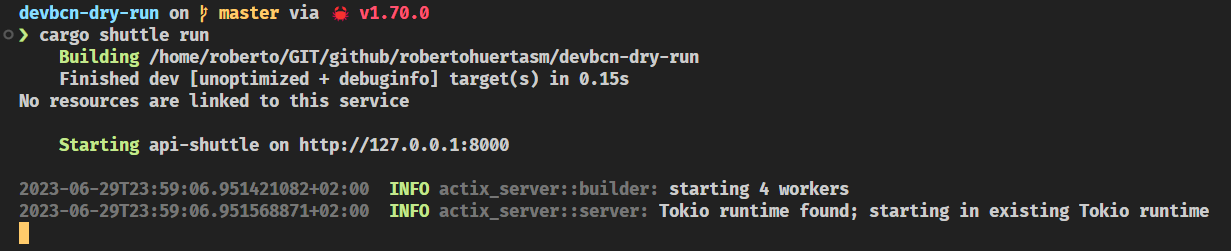

Let's run it!

In the root of the project, run the following command:

cargo shuttle run

You should see something like this:

Now curl the / route:

curl localhost:8000

Or open it in your browser.

Hopefully, you should see a greeting on your screen!

And that's how easy it is to create a simple API with Shuttle!

Try to add more routes and see what happens!

We're using Actix Web as our web framework, but you can use any other framework supported by Shuttle.

Check out the Shuttle documentation to learn more. Browse through the Examples section to see how to use Shuttle with other frameworks.

At the time of writing, Shuttle supports:

Deploying with Shuttle

So far so good. We have a working API and we can run it locally. Now, let's deploy it to the cloud and see how easy it is to do so with Shuttle.

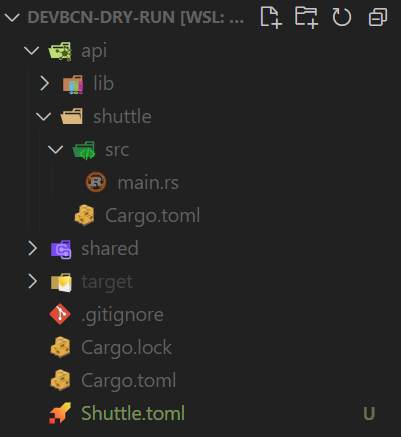

Shuttle.toml file

Shuttle will use the name of the workspace directory as the name of the project.

To avoid colliding with other people who gave their folder the same name, we will use a Shuttle.toml file to override the name of the project.

Go to the root of your workspace and create a Shuttle.toml file with the following content:

name = "name_you_want"

Your directory structure should look like this:

Commit the changes to your repository.

git add .

git commit -m "add Shuttle.toml file"

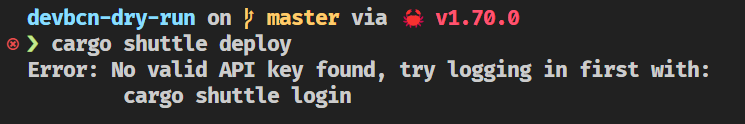

Deploying to the cloud

Now that we have a Shuttle.toml file, we can deploy our API to the cloud. To do so, run the following command:

cargo shuttle deploy

You should get an error message similar to this one:

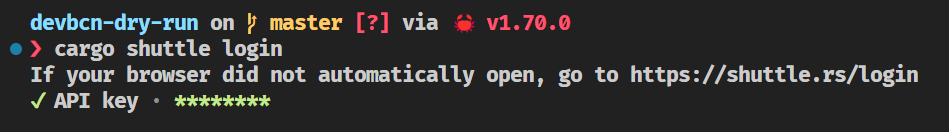

Login to Shuttle

Let's do what the previous message suggests and run cargo shuttle login.

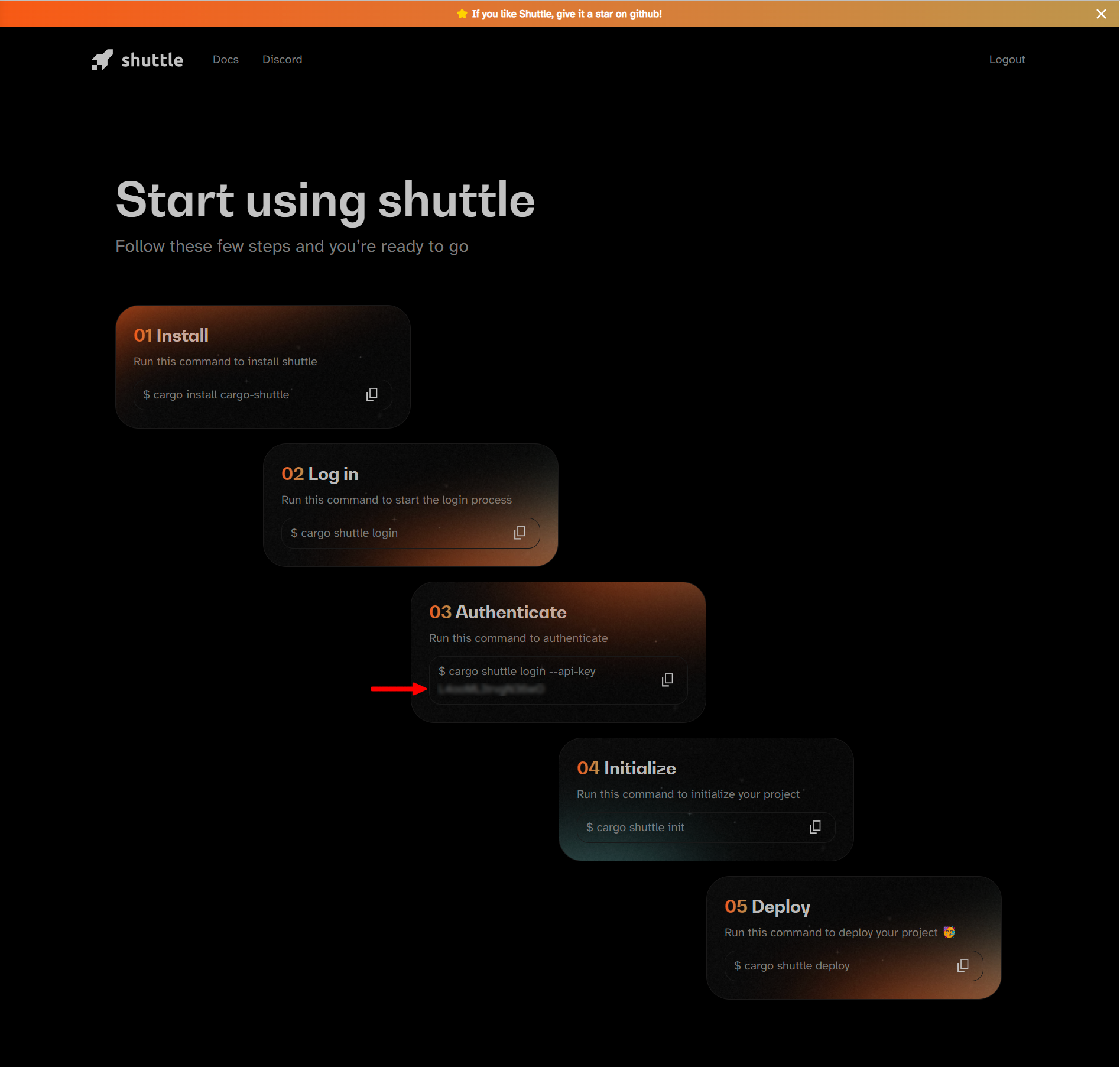

Note that you will need a GitHub account to be able to log in.

The moment you run the cargo shuttle login command, you will be redirected to a Shuttle page like this so you can authorize Shuttle to access your GitHub account.

In your terminal, you should see something like this:

Continue the login process in your browser and copy the code you get in section 03 of the Shuttle page.

Then paste the code in your terminal and press enter.

Let's deploy!

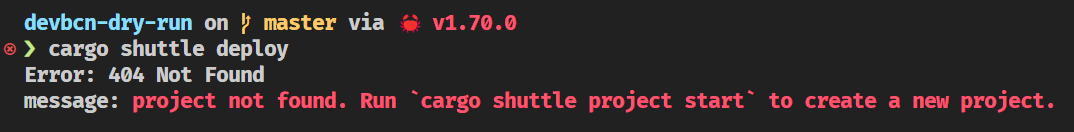

Now that we have logged in, we can deploy our API to the cloud. To do so, run the following command:

cargo shuttle deploy

Oh no! We got another error message:

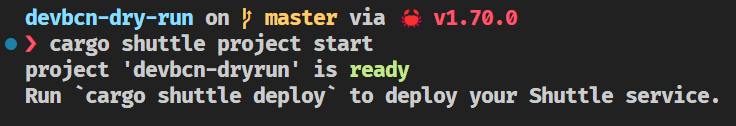

The problem is that we haven't created the project environment yet. Let's do that now.

cargo shuttle project start

If everything went well, you should see something like this:

Now, let's finally deploy our API to the cloud by running the following command again:

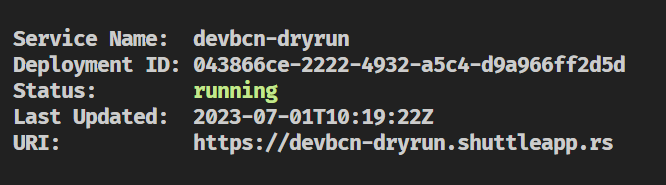

cargo shuttle deploy

You should see in your terminal how everything is being deployed and compiled in the Shuttle cloud. This can take a while, so be patient and wait for a message like the one below:

Browse to the URI shown in the message or curl it to see the result:

curl https://<your_project_name>.shuttleapp.rs

Hello world! Easy, right?

We have deployed our API to the cloud!

The URI of your project is predictable and will always conform to this convention: https://<your_project_name>.shuttleapp.rs.
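The naming rules described so far fit in a few lines: the workspace directory name is the default, a Shuttle.toml name overrides it, and the URL follows a fixed pattern. This is a hypothetical sketch. project_name and project_url are not part of the Shuttle CLI, and the toy "parser" only understands a single name = "..." line.

```rust
/// Hypothetical: pick the project name the way this guide describes.
/// A `name = "..."` line in Shuttle.toml wins; otherwise use the workspace directory name.
pub fn project_name(workspace_dir: &str, shuttle_toml: Option<&str>) -> String {
    let from_toml = shuttle_toml.and_then(|contents| {
        contents.lines().find_map(|line| {
            let (key, value) = line.split_once('=')?;
            if key.trim() == "name" {
                Some(value.trim().trim_matches('"').to_string())
            } else {
                None
            }
        })
    });
    from_toml.unwrap_or_else(|| workspace_dir.to_string())
}

/// The deployed URL follows the https://<your_project_name>.shuttleapp.rs convention.
pub fn project_url(name: &str) -> String {
    format!("https://{name}.shuttleapp.rs")
}
```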

Shuttle CLI and Console

CLI

Shuttle provides a CLI that we can use to interact with our project. We have already used it to create the project and to deploy it to the cloud.

Let's take a look at the available commands:

cargo shuttle --help

You can also get more information by exploring the Shuttle CLI documentation.

Interesting commands

Let's take a look at some of the commands that we will use the most.

- cargo shuttle deploy: Deploy the project to the cloud.

- cargo shuttle logs: Display the logs of a deployment.

- cargo shuttle status: Display the status of the service.

- cargo shuttle project status: Display the status of the project.

- cargo shuttle project list: Display a list of projects and their current status.

- cargo shuttle project restart: Restart a project. Useful when you need to upgrade the version of your Shuttle dependencies.

- cargo shuttle resource list: Display a list of resources and their current status. Useful to see connection strings and other information about the resources used by the project.

Console

Shuttle also provides a Console that we can use to interact with our project.

It's still in the early days but it already provides some interesting features. For instance, we can use it to see the logs of our project.

Working with a Database

For our project we will use a PostgreSQL database.

You may be already thinking about how to provision that database both locally and in the cloud, and the amount of work that it will take to do so. But no worries, we will use Shuttle to do that for us.

Using Shuttle Shared Databases

Open this link to the Shuttle Docs and follow the instructions to create a shared database in AWS.

As you will be able to see, just by using a macro we will be able to get a database connection injected into our code and a database fully provisioned both locally and in the cloud.

So let's get started!

Adding the dependencies

Go to the Cargo.toml file in the api > shuttle folder and add the following dependencies to the ones you already have:

[dependencies]
...

# database
shuttle-shared-db = { version = "0.47.0", features = ["postgres", "sqlx"] }
sqlx = { version = "0.7", default-features = false, features = [
    "tls-native-tls",
    "macros",
    "postgres",
    "uuid",
    "chrono",
    "json",
] }

If you want to learn more about how to add dependencies to your Cargo.toml file, please refer to the Cargo Docs.

We are adding the shuttle-shared-db dependency to get the database connection injected into our code and the SQLx dependency to be able to use the database connection.

Note that the SQLx dependency has a lot of features enabled. We will use them later on in the project.

If you want to learn more about features in Rust, please refer to the Cargo Docs.

Injecting the database connection

Now that we have the dependencies, we need to inject the database connection into our code.

Open the main.rs file in the api > shuttle > src folder and add the following code as the first parameter of the actix_web function:

#[shuttle_shared_db::Postgres] pool: sqlx::PgPool,

The function should look like this:

#[shuttle_runtime::main]
async fn actix_web(
    #[shuttle_shared_db::Postgres] pool: sqlx::PgPool,
) -> ShuttleActixWeb<impl FnOnce(&mut ServiceConfig) + Send + Clone + 'static> {
    let config = move |cfg: &mut ServiceConfig| {
        cfg.service(hello_world);
    };

    Ok(config.into())
}

Let's build the project. We will get a warning because we're not using the pool variable yet, but we will fix that in a moment.

cargo build

Running the project

Now that we have the database connection injected into our code, we can run the project and see what happens.

cargo shuttle run

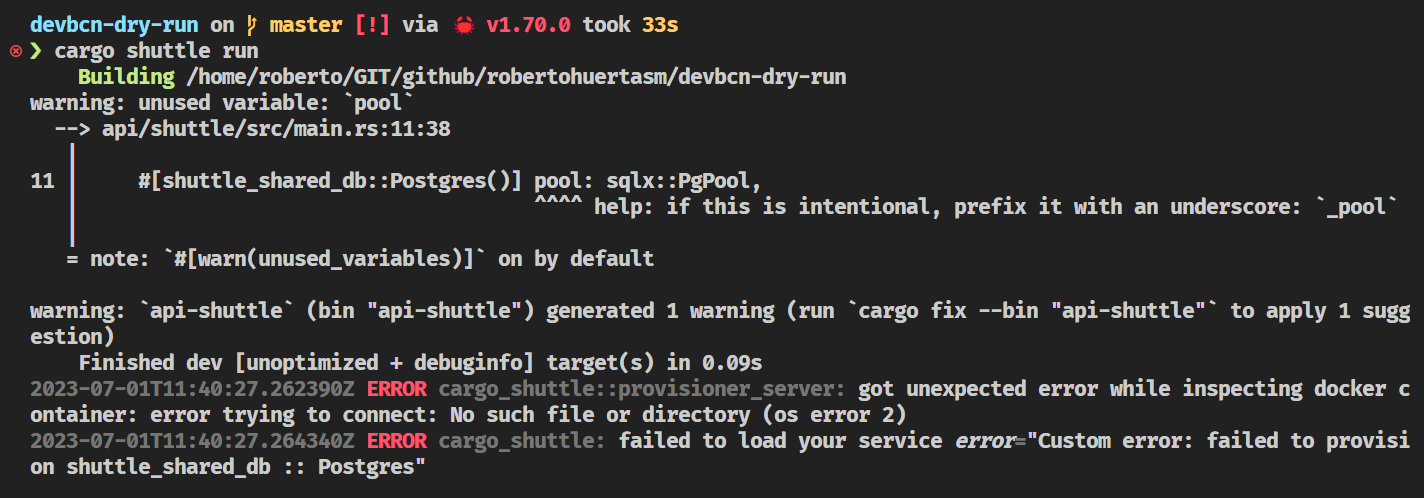

You will see that the project is building and then it will fail with the following error:

Docker

The error is telling us that we need to have Docker running in our system.

Let's start Docker and run the project again.

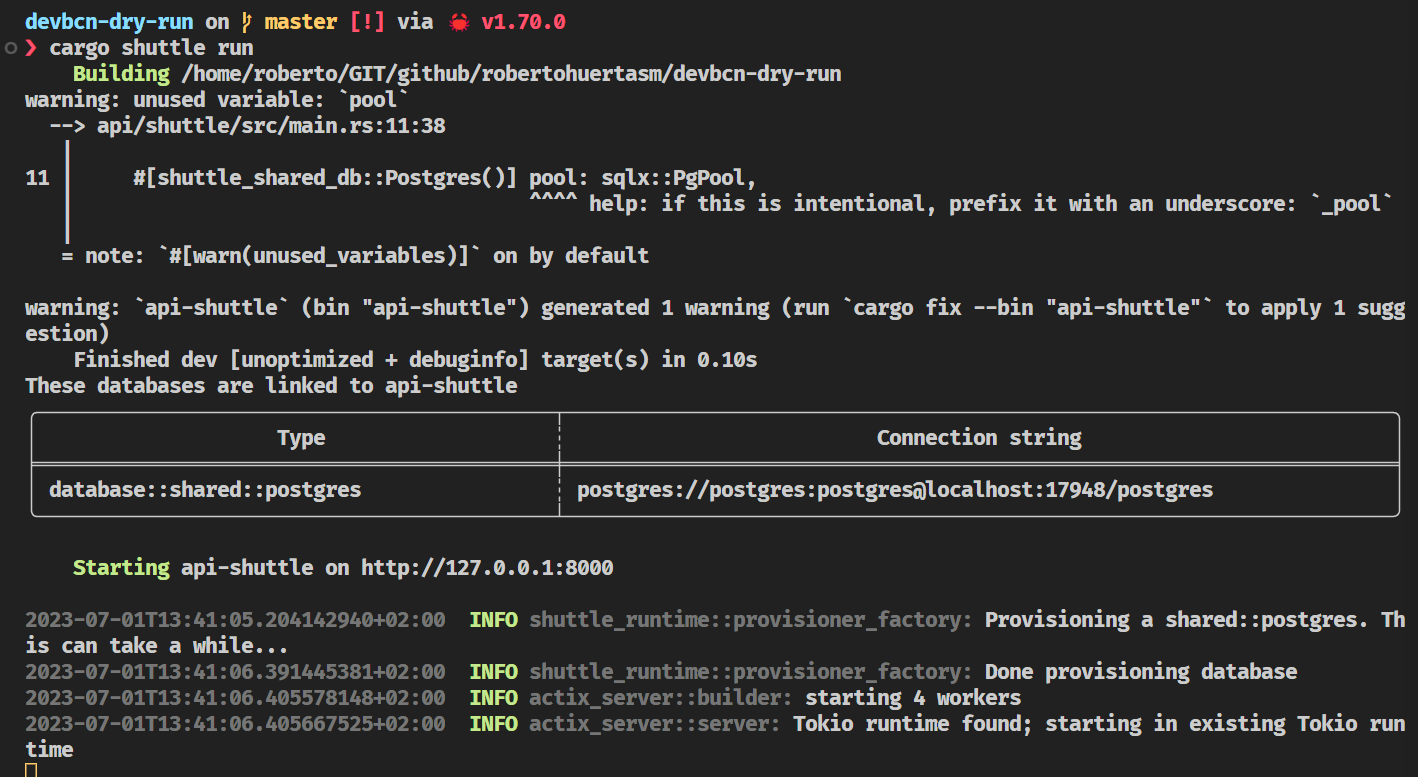

cargo shuttle run

This time the project will build and run successfully.

Note that you will be able to find the connection string to the database in the logs. We will use that connection string later on in the project.
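A Postgres connection string packs everything a client such as DBeaver asks for into one URI of the form postgres://user:password@host:port/database. The std-only sketch below splits a string of that shape into its parts. ConnInfo and parse_conn are hypothetical and the sample string is made up. Real URIs can also carry query parameters and percent-encoding, which this sketch does not handle.

```rust
/// Hypothetical container for the fields a database client asks for.
#[derive(Debug, PartialEq)]
pub struct ConnInfo {
    pub user: String,
    pub password: String,
    pub host: String,
    pub port: u16,
    pub database: String,
}

/// Split `postgres://user:password@host:port/database` into its parts.
/// Returns `None` if any piece is missing; no percent-decoding or `?params`.
pub fn parse_conn(uri: &str) -> Option<ConnInfo> {
    let rest = uri
        .strip_prefix("postgres://")
        .or_else(|| uri.strip_prefix("postgresql://"))?;
    let (credentials, location) = rest.rsplit_once('@')?;
    let (user, password) = credentials.split_once(':')?;
    let (host_port, database) = location.split_once('/')?;
    let (host, port) = host_port.rsplit_once(':')?;
    Some(ConnInfo {
        user: user.to_string(),
        password: password.to_string(),
        host: host.to_string(),
        port: port.parse::<u16>().ok()?,
        database: database.to_string(),
    })
}
```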

Commit your changes.

git add .

git commit -m "add database connection"

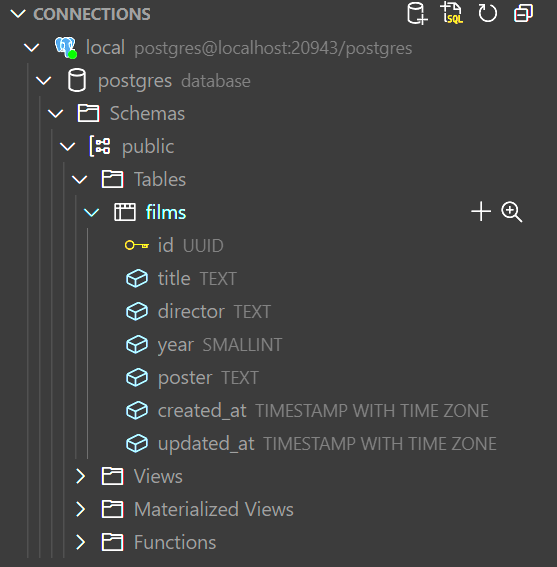

Setting up the Database

In this section we will set up the database for our project.

This is going to be a very simple CRUD application, so we will only need one table for our movies.

Creating the initial script

There are many ways to work with a database. We could use the SQLx CLI or Refinery to create and manage our database migrations, but as this is out of the scope of this workshop, we will create a simple script that will create the table for us.

Create a new file api/db/schema.sql with the following content:

CREATE EXTENSION IF NOT EXISTS "uuid-ossp";

CREATE TABLE IF NOT EXISTS films
(
    id uuid DEFAULT uuid_generate_v1() NOT NULL CONSTRAINT films_pkey PRIMARY KEY,
    title text NOT NULL,
    director text NOT NULL,
    year smallint NOT NULL,
    poster text NOT NULL,
    created_at timestamp with time zone default CURRENT_TIMESTAMP,
    updated_at timestamp with time zone
);

You can see that this script will create a table called films only if that table does not exist yet.
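On the Rust side, each column will eventually need a matching type. SQLx maps Postgres smallint to i16, so a year that arrives as a wider integer (say a u32) should be range-checked before it reaches the table. This is a hypothetical, std-only sketch. NewFilm and year_from are illustrative names, and the uuid and timestamp columns are left out because mapping them needs the uuid and chrono crates.

```rust
use std::convert::TryFrom;

/// Hypothetical Rust mirror of the non-generated columns of `films`.
#[derive(Debug, PartialEq)]
pub struct NewFilm {
    pub title: String,
    pub director: String,
    pub year: i16, // `smallint` is a 16-bit integer: -32768..=32767
    pub poster: String,
}

/// Reject years that do not fit in a `smallint` instead of letting the insert fail later.
pub fn year_from(raw: u32) -> Result<i16, String> {
    i16::try_from(raw).map_err(|_| format!("year {raw} does not fit in a smallint"))
}
```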

Executing the initial script

Now that we have the script, we need to execute it.

Open the main.rs file in the api > shuttle > src folder and add the following code as the first line in the body of the actix_web function:

// initialize the database if not already initialized
pool.execute(include_str!("../../db/schema.sql"))
    .await
    .map_err(CustomError::new)?;

Add the following imports to the top of the file:

use shuttle_runtime::CustomError;
use sqlx::Executor;

Be sure that the path to the schema.sql file is correct. Try changing the path to something else and see what happens when you try to compile the project: cargo build.
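The .map_err(CustomError::new)? chain does two things. It converts whatever error SQLx returns into the error type the entry point returns, and ? then returns early if the call failed. The same mechanics are shown below with std-only, hypothetical types. DbError, StartupError, execute and init_schema stand in for sqlx::Error, shuttle_runtime::CustomError and the real pool.execute call. They are not those types.

```rust
use std::fmt;

/// Hypothetical stand-in for `sqlx::Error`.
#[derive(Debug)]
pub struct DbError(pub String);

impl fmt::Display for DbError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "database error: {}", self.0)
    }
}

/// Hypothetical stand-in for the error type the entry point returns.
#[derive(Debug)]
pub struct StartupError(pub String);

impl StartupError {
    /// Plays the role of `CustomError::new` here: wrap any displayable error.
    pub fn new(err: impl fmt::Display) -> Self {
        StartupError(err.to_string())
    }
}

/// Pretend to run the schema script; an empty script counts as a failure.
fn execute(script: &str) -> Result<(), DbError> {
    if script.trim().is_empty() {
        Err(DbError("empty script".into()))
    } else {
        Ok(())
    }
}

/// Same shape as the startup code: convert the error type, then propagate it with `?`.
pub fn init_schema(script: &str) -> Result<&'static str, StartupError> {
    execute(script).map_err(StartupError::new)?;
    Ok("schema ready")
}
```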

Running the project

Let's run the project again and see if the database is created as expected.

cargo shuttle run

If you check your database, you should see that the films table has been created:

Commit your changes.

git add .

git commit -m "setup database"

Connecting to the Database

Now that we have everything we need, let's start by creating a simple endpoint that returns the database version.

This will help us to get acquainted with SQLx.

Creating the endpoint

Can you create the endpoint yourself?

Don't worry about how to retrieve the information from the database, we will do that in a moment. Just focus on creating an endpoint that will return a string. The string can be anything you want and the route should be /version.

If you're not sure about how to do it, expand the section below to see the solution.

Solution


Open the main.rs file in the api > shuttle > src folder and add the following code:

#[get("/version")]
async fn version() -> &'static str {
    "version 0.0.0"
}

Setting up the endpoint

You may have noticed that if you run the project and go to the /version route, you will get a 404 error.

curl -i http://localhost:8000/version
# HTTP/1.1 404 Not Found

This is because we haven't set up the endpoint yet.

Can you guess how to do it?

Solution


In the main.rs file in the api > shuttle > src folder, find the line containing cfg.service(hello_world); and append .service(version) to it.

The line should look like this:

let config = move |cfg: &mut ServiceConfig| {
    // NOTE: this is the modified line
    cfg.service(hello_world).service(version);
};

Now, let's try it again:

curl -i http://localhost:8000/version
# HTTP/1.1 200 OK
# version 0.0.0

Did it work? If so, congratulations! You have just created your first endpoint.

Connecting to the database

Now that we have the endpoint, let's connect to the database and retrieve the version.

For that we will need to do a couple of things:

- Pass the database connection pool to the endpoint.

- Execute a query in the endpoint and return the result.

Passing the database connection pool to the endpoint

In order to pass the connection pool to the endpoints we're going to leverage the Application State Extractor from Actix Web.

You can learn more about how to handle the state in Actix Web in the official documentation.

Ok, so just after the line where we initialized our database, let's add the following code:

let pool = actix_web::web::Data::new(pool);

You may have noticed that we're using the same name for the variable that holds the connection pool and the one that holds the data. This is called variable shadowing.
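web::Data<T> wraps its value in an Arc, which is why it can be cloned cheaply and shared by every worker. The sketch below shows the same shadowing and sharing with a plain std::sync::Arc. FakePool is a hypothetical stand-in for sqlx::PgPool, the URL is made up, and Arc::as_ref does the job that .get_ref() does on Data.

```rust
use std::sync::Arc;
use std::thread;

/// Hypothetical stand-in for `sqlx::PgPool`.
pub struct FakePool {
    pub url: String,
}

/// Shadow the pool with a shared wrapper, then hand clones to two "workers".
pub fn share_pool() -> Vec<String> {
    let pool = FakePool { url: "postgres://localhost/films".to_string() };
    // Shadowing: same name, new type (FakePool -> Arc<FakePool>); the old binding is moved.
    let pool = Arc::new(pool);

    let handles: Vec<_> = (0..2)
        .map(|id| {
            let pool = Arc::clone(&pool); // cheap: only bumps a reference count
            thread::spawn(move || {
                let inner: &FakePool = pool.as_ref(); // like `db.get_ref()`
                format!("worker {} uses {}", id, inner.url)
            })
        })
        .collect();

    handles.into_iter().map(|h| h.join().unwrap()).collect()
}
```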

Now we need to modify, once again, the line we changed when we added the new endpoint, this time calling the .app_data method:

let config = move |cfg: &mut ServiceConfig| {
    cfg.app_data(pool).service(hello_world).service(version);
};

Executing a query in the endpoint and returning the result

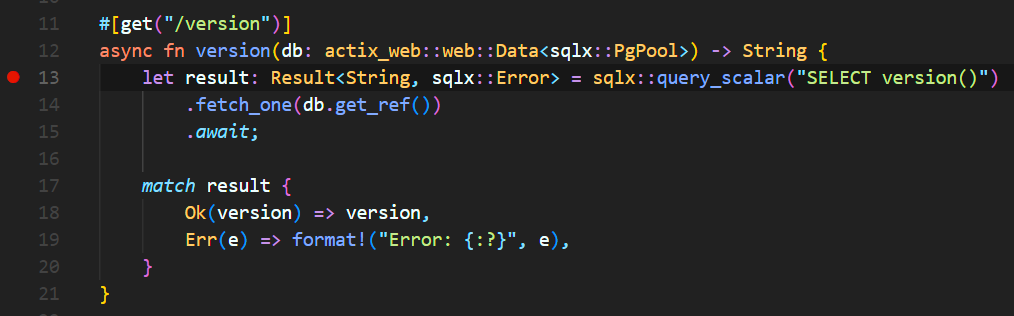

Now let's change our version endpoint so we can get the connection pool from the state and execute a query. If you have taken a look at the Application State Extractor documentation, it should be pretty straightforward.

We have to add a parameter to the version function that will be our access to the database connection pool. We will call it db and it will be of type actix_web::web::Data<sqlx::PgPool>.

#[get("/version")]
async fn version(db: actix_web::web::Data<sqlx::PgPool>) -> &'static str {
    "version 0.0.0"
}

Now, we need to execute a query. For that, we will use the sqlx::query_scalar function.

Let's change the version function to this:

#[get("/version")]
async fn version(db: actix_web::web::Data<sqlx::PgPool>) -> String {
    let result: Result<String, sqlx::Error> = sqlx::query_scalar("SELECT version()")
        .fetch_one(db.get_ref())
        .await;

    match result {
        Ok(version) => version,
        Err(e) => format!("Error: {:?}", e),
    }
}

There are a couple of things going on here, so let's break it down.

First of all, it's worth noticing that we changed the return type of the function to String. This is for two different reasons:

- We don't want our endpoint to fail. If the query fails, we will return an error message as a String.

- We need the return type to be a String because the version of the database will come to us as a String.

On the other hand, we have the sqlx::query_scalar function. This function will execute a query and return a single value. In our case, the version of the database.

As you can see, the query is pretty simple. We're just selecting the version of the database. The most interesting part is that we need to use the .get_ref() method to get a reference to the inner sqlx::PgPool from the actix_web::web::Data<sqlx::PgPool>.

Finally, we have the match expression. The sqlx::query_scalar function will return a Result with either the version of the database or an error. With the match expression we're covering both cases and we will make sure that we will always return a String.

Note that most of the time we don't need the return keyword in Rust. The last expression in a function will be the return value.

Introduce an error in the query and see what happens. Take some time to check out how the format! macro works.

Note that even if you introduce an error in the query, the endpoint will not fail or even return a 500 error. This is because we're handling the error in the match expression.
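The endpoint never fails because both arms of the match produce a String. The helper below isolates that logic so it can be checked without a database. version_or_error is a hypothetical name, and a plain String error stands in for sqlx::Error.

```rust
use std::fmt::Debug;

/// Turn the query result into the response body: the version on success,
/// a readable message on failure. Either way the caller gets a `String`.
pub fn version_or_error<E: Debug>(result: Result<String, E>) -> String {
    match result {
        Ok(version) => version,
        Err(e) => format!("Error: {:?}", e),
    }
}
```

This comes with a trade-off. The client receives a 200 OK even when the query fails, because the error text is returned as an ordinary body.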

We will see later how to handle errors in a more elegant way.

For now, let's commit our changes:

git add .

git commit -m "add version endpoint"

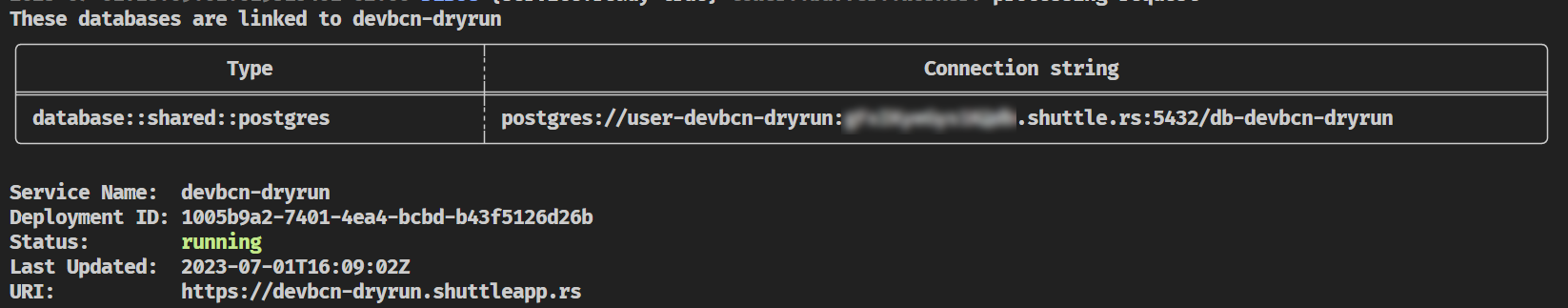

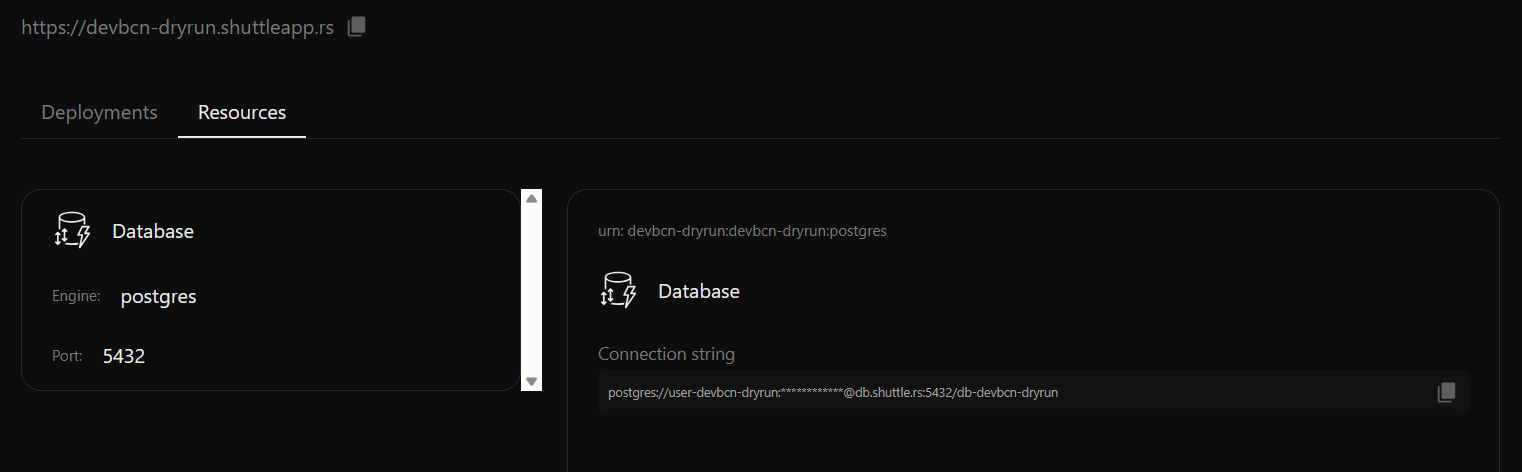

Deploying the Database

By now, this should be a familiar process. We'll use the same shuttle command we used to deploy the backend to deploy the database.

cargo shuttle deploy

As you've seen, we don't need to do anything special to deploy the database. Shuttle will detect that we have a database dependency in our code and will provision it for us. Neat, isn't it?

While the deployment takes place, you can take a look at this blog post to learn more about the concept of Infrastructure From Code.

Once the deployment is complete, you can check the database connection string in the terminal.

Don't worry if you missed it. You can always check the database connection string in the terminal by running the following command.

cargo shuttle resource list

You can also go to the Shuttle Console and check the database connection string there.

Testing the new endpoint

curl -i https://your-project-name.shuttleapp.rs/version

You should get a response similar to the following.

HTTP/1.1 200 OK
content-length: 115
content-type: text/plain; charset=utf-8
date: Sat, 01 Jul 2023 16:27:07 GMT
server: shuttle.rs

PostgreSQL 14.8 (Debian 14.8-1.pgdg120+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 12.2.0-14) 12.2.0, 64-bit

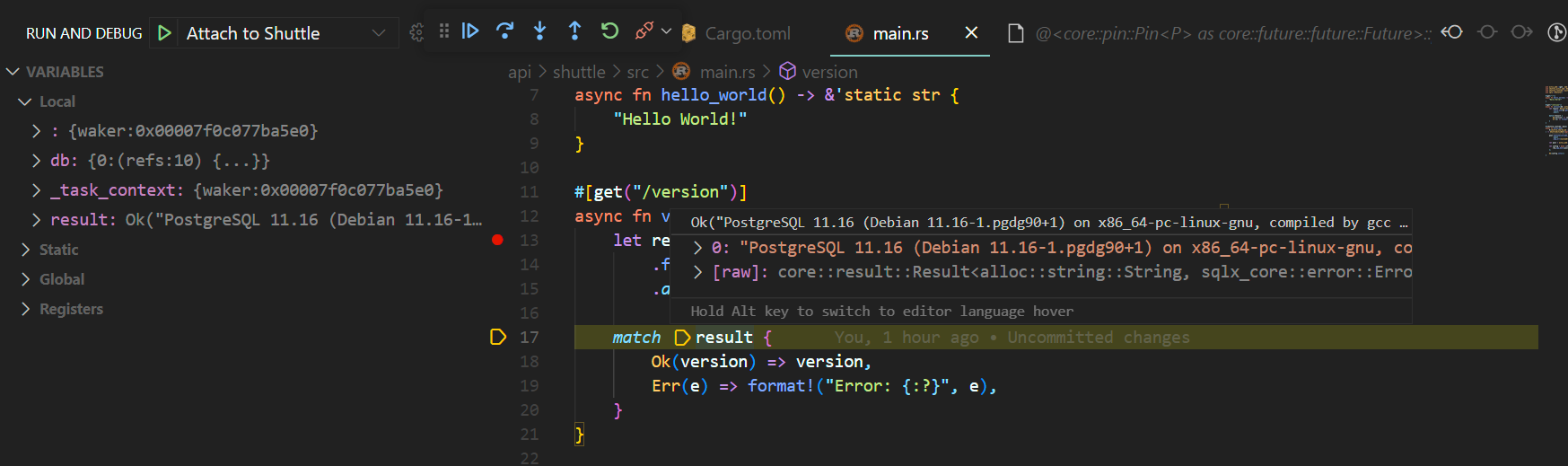

Debugging

In this section we are going to cover how to debug the backend using Visual Studio Code.

Make sure that you have installed these two extensions:

Once you have them installed, create a new file in the root of the project called .vscode/launch.json with the following content:

{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "type": "lldb",
            "request": "attach",
            "name": "Attach to Shuttle",
            "program": "${workspaceFolder}/target/debug/api-shuttle"
        }
    ]
}

The most important point to take into account here is that the program attribute must point to the binary that you want to debug.

So, in order to test that this is working, let's put a breakpoint in our version endpoint:

Now, run the project with cargo shuttle run and then press F5 to start debugging.

curl the version endpoint:

curl -i http://localhost:8000/version

Commit your changes:

git add .

git commit -m "add debugging configuration"

Instrumentation

In order to instrument our backend, we are going to use the tracing crate.

Let's add this dependency to the Cargo.toml file of our Shuttle crate:

tracing = "0.1"

Now, let's add some instrumentation to our main.rs file. Feel free to add as many logs as you want. For example:

#[get("/version")]
async fn version(db: actix_web::web::Data<sqlx::PgPool>) -> String {
    // NOTE: added line below:
    tracing::info!("Getting version");
    let result: Result<String, sqlx::Error> = sqlx::query_scalar("SELECT version()")
        .fetch_one(db.get_ref())
        .await;

    match result {
        Ok(version) => version,
        Err(e) => format!("Error: {:?}", e),
    }
}

If you run the application now, and hit the version endpoint, you should see something like this in the logs of your terminal:

2023-07-01T19:47:32.836809924+02:00 INFO api_shuttle: Getting version

Log level

By default, the log level is set to info. This means that all logs with a level of info or higher will be displayed. If you want to change the log level, you can do so by setting the RUST_LOG environment variable. For example, if you want to see all logs, you can set the log level to trace:

RUST_LOG=trace cargo shuttle run
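"Info or higher" only makes sense because the levels are ordered. Here is a std-only sketch of that filtering rule. It uses a hypothetical Level enum, not the tracing crate's own Level type. Real RUST_LOG values can also contain per-module directives, and this sketch ignores them.

```rust
/// Hypothetical severity levels, declared from least to most severe so that
/// the derived ordering gives Trace < Debug < Info < Warn < Error.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
pub enum Level {
    Trace,
    Debug,
    Info,
    Warn,
    Error,
}

/// Parse a single level name such as "info" (case-insensitive), as in RUST_LOG=info.
pub fn parse_level(s: &str) -> Option<Level> {
    match s.to_ascii_lowercase().as_str() {
        "trace" => Some(Level::Trace),
        "debug" => Some(Level::Debug),
        "info" => Some(Level::Info),
        "warn" => Some(Level::Warn),
        "error" => Some(Level::Error),
        _ => None,
    }
}

/// A record is shown when its level is at least the configured threshold.
pub fn is_enabled(threshold: Level, record: Level) -> bool {
    record >= threshold
}
```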

For more information about Telemetry and Shuttle, please refer to the Shuttle documentation.

Let's commit our changes:

git add .

git commit -m "added instrumentation"

Watch Mode

You may be thinking that it would be nice to have a way to automatically restart the backend when you make changes to the code.

Well, you're in luck! Enter cargo-watch.

If you don't have it already installed, you can do so by running:

cargo install cargo-watch

Next, in order to start the backend in watch mode, you can run:

cargo watch -x "shuttle run"

This will start the backend in watch mode. Now, whenever you make changes to the code, the backend will automatically restart.

Moving our endpoints to a library

The idea behind this section is to move our endpoints to a library so that we can reuse them in case we want to provide a different binary that doesn't rely on Shuttle.

Imagine, for example, that you want to deploy your API to your cloud of choice. Most probably you'll want to use a container to do so. In that case, having our endpoints in a library will allow us to create a binary that works purely on Actix Web.

Adding a local dependency

Remember that we already created a lib crate in one of the previous sections? Well, we are going to use that crate to move our endpoints there.

But first of all, we need to add a dependency to our api-shuttle Cargo.toml file. We can do so by adding the following line:

[dependencies]
...
api-lib = { path = "../lib" }

api-lib is the name we gave to that library (you can check that in the Cargo.toml file in the api > lib folder).

Compile and check that you don't receive any compiler errors:

# just compile
cargo build

# or compile in watch mode
cargo watch -x build

# or run the binary
cargo shuttle run

# or run the binary in watch mode
cargo watch -x "shuttle run"

As you can see, adding a local dependency is trivial. You just need to specify the path to the library.

Moving the endpoints

Open the api > lib > src folder and create a new file called health.rs. This file will contain just one endpoint that will be used to check the health of the API, but for the sake of the example, we are going to temporarily move our previous endpoints here.

Copy the following code from api > shuttle > src > main.rs into our newly created health.rs file:

// `pub` is needed so the shuttle crate can import these handlers later
#[get("/")]
pub async fn hello_world() -> &'static str {
    "Hello World!"
}

#[get("/version")]
pub async fn version(db: actix_web::web::Data<sqlx::PgPool>) -> String {
    tracing::info!("Getting version");
    let result: Result<String, sqlx::Error> = sqlx::query_scalar("SELECT version()")
        .fetch_one(db.get_ref())
        .await;

    match result {
        Ok(version) => version,
        Err(e) => format!("Error: {:?}", e),
    }
}

If you are in watch mode or you try to compile, you will see that you don't get any kind of error. That's because the code in health.rs is not being used yet.

Let's use it now. Open the api > lib > src > lib.rs file, remove all the content, and add the following line at the top of the file:

pub mod health;

A couple of things to take into account here:

- lib.rs files are the default entry point for library crates.

- The line we introduced in the lib.rs file is doing two things:
  - First of all, it is declaring a new module called health (hence the compiler will care about our health.rs file's content).
  - Secondly, it is making that module public. This means that we can access everything that we export (mark as pub) from that module.
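The effect of pub mod health; can be reproduced in a single file with an inline module. In this std-only sketch the handler is an ordinary function with no #[get] attribute, and greeting is a hypothetical private helper. It shows that an item must be pub, inside a reachable module, to be used from outside, and that use then brings it into scope the way the shuttle crate will import from api_lib::health.

```rust
// `pub mod health { ... }` is the inline equivalent of `pub mod health;` plus a health.rs file.
pub mod health {
    /// Public: reachable from outside the module.
    pub fn hello_world() -> &'static str {
        greeting()
    }

    /// Private: only code inside `health` can call this.
    fn greeting() -> &'static str {
        "Hello World!"
    }
}

// Bring the public item into scope by name.
// `use health::greeting;` would not compile, because `greeting` is private.
use health::hello_world;
```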

Now, if you compile, you should be getting errors from the compiler complaining about dependencies. Let's add them to the Cargo.toml file:

[dependencies]
# actix
actix-web = "4.9.0"

# database
sqlx = { version = "0.7", default-features = false, features = [
    "tls-native-tls",
    "macros",
    "postgres",
    "uuid",
    "chrono",
    "json",
] }

# tracing
tracing = "0.1"

We will be adding more dependencies in the future, but for now, this is enough.

Finally, to make the compiler happy, import the get macro at the top of the health.rs file:

```rust
use actix_web::get;
```

Everything should compile by now.

Note that we're not using any Shuttle dependency in this crate.

Using the endpoints

Now that we have our endpoints in a library, we can use them in our main.rs file. Let's do that.

Open the api > shuttle > src > main.rs file and remove the endpoints code that we copied before. Get rid of the unused use statements as well.

Do you know what to do next?

Solution

Yes, you only have to import the endpoints from the library. It's a one-liner:

```rust
use api_lib::health::{hello_world, version};
```

Is it working? It should!

If you want to try out the endpoints without using Shuttle, you can create a new binary crate in the api folder and use the endpoints there. Check the Actix Web documentation for more information.

This is out of the scope of this workshop because of time constraints but feel free to explore that option. You can also take a look at the workshop's GitHub repository to see how to do it.

Commit your changes:

git add .

git commit -m "move endpoints to a library"

Creating a health check endpoint

We're going to get rid of the previous endpoints and create a health check endpoint. This endpoint will be used to check if the application is running and ready to receive requests.

This endpoint will be very basic and will just return a 200 OK response with a custom header containing the version (this is just for fun, not really needed at all).

Armed with the knowledge we've gained so far, we should be able to handle this change.

- The route should be /health and use the GET method.
- The response should be a 200 OK with a custom header named version containing the version (a &str containing "v0.0.1", for example).
- As a hint, you can check the code in the Actix Web docs to see how to return a simple 200 OK response.
- You can also check out the HttpResponse docs to see how to add a header to the response.

Can you do it?

Solution

- Remove the previous endpoints.
- Create a new endpoint with the route /health and the method GET.

```rust
use actix_web::{get, HttpResponse};

#[get("/health")]
async fn health() -> HttpResponse {
    HttpResponse::Ok()
        .append_header(("version", "v0.0.1"))
        .finish()
}
```

- Configure the services in your shuttle crate. Remove the previous services and add the new one.

Your api > shuttle > src > main.rs file should look like this:

```rust
use actix_web::web::ServiceConfig;
use api_lib::health::health;
use shuttle_actix_web::ShuttleActixWeb;
use shuttle_runtime::CustomError;
use sqlx::Executor;

#[shuttle_runtime::main]
async fn actix_web(
    #[shuttle_shared_db::Postgres] pool: sqlx::PgPool,
) -> ShuttleActixWeb<impl FnOnce(&mut ServiceConfig) + Send + Clone + 'static> {
    // initialize the database if not already initialized
    pool.execute(include_str!("../../db/schema.sql"))
        .await
        .map_err(CustomError::new)?;

    let pool = actix_web::web::Data::new(pool);

    let config = move |cfg: &mut ServiceConfig| {
        cfg.app_data(pool).service(health);
    };

    Ok(config.into())
}
```

Test that everything is working by running the following command:

curl -i http://localhost:8000/health

You should get something like this:

```text
HTTP/1.1 200 OK
content-length: 0
version: v0.0.1
date: Sun, 02 Jul 2023 10:35:15 GMT
```

Commit your changes.

git add .

git commit -m "add health check endpoint"

Using the configure method

You may have noticed that when our health.rs file contained two different endpoints, we had to register each of them as a service on the ServiceConfig in the actix_web function. This is not a problem when we have a few endpoints, but it quickly gets unwieldy with many of them, and it forces the shuttle crate to know about every single handler.

To keep our code cleaner, we can use the configure method of ServiceConfig.

Take a look at the Actix Web docs to see how to use it.

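In fact, if you take a look at the code in our shuttle crate, you will see that we are already using this idea. The config closure we hand to Shuttle is exactly the kind of function configure accepts: it takes a &mut ServiceConfig and registers services on it.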

```rust
let config = move |cfg: &mut ServiceConfig| {
    cfg.app_data(pool).service(health);
};
```

You could change this code to this and it should work the same:

```rust
let config = move |cfg: &mut ServiceConfig| {
    cfg.app_data(pool).configure(|c| {
        c.service(health);
    });
};
```

Try it out!

Refactoring our code

Let's refactor our code to use the configure method both in the health.rs file and in the main.rs file.

In the main.rs file, we will be expecting to receive a configure function from the health module, so we will change the code to this:

```rust
let config = move |cfg: &mut ServiceConfig| {
    cfg.app_data(pool).configure(api_lib::health::service);
};
```

Note that it won't compile, because we haven't changed the health.rs file yet.

So, in the health.rs file, we need to export a function called service that receives a mutable reference to a ServiceConfig and returns nothing.

```rust
// add the use statement for ServiceConfig
use actix_web::{get, web::ServiceConfig, HttpResponse};

pub fn service(cfg: &mut ServiceConfig) {
    cfg.service(health);
}
```

Now, we can run the code and it should work the same as before.
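To see why handing api_lib::health::service to configure works, here is a std-only model of the pattern. Router, route and configure are hypothetical stand-ins for Actix's ServiceConfig and are not its real API. The point is only that a plain fn(&mut Router) can be passed wherever a configuration closure is expected, so the top-level crate never needs to know about individual handlers.

```rust
// Hypothetical, std-only stand-in for actix_web::web::ServiceConfig.
// It only records "METHOD path" strings; it does not serve anything.
#[derive(Default)]
pub struct Router {
    pub routes: Vec<String>,
}

impl Router {
    pub fn route(&mut self, method: &str, path: &str) -> &mut Self {
        self.routes.push(format!("{method} {path}"));
        self
    }

    // Same shape as ServiceConfig::configure: accepts any function or
    // closure that takes the config by mutable reference.
    pub fn configure<F: FnOnce(&mut Router)>(&mut self, f: F) -> &mut Self {
        f(&mut *self);
        self
    }
}

// Each module exposes a single public entry point, like health::service.
mod health {
    pub fn service(cfg: &mut super::Router) {
        cfg.route("GET", "/health");
    }
}

mod films {
    pub fn service(cfg: &mut super::Router) {
        cfg.route("GET", "/v1/films").route("POST", "/v1/films");
    }
}

fn main() {
    let mut router = Router::default();
    // Plain functions and closures can be mixed freely.
    router
        .configure(health::service)
        .configure(films::service)
        .configure(|cfg| {
            cfg.route("GET", "/closure");
        });

    for route in &router.routes {
        println!("{route}");
    }
}
```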

There are, though, a few things that we can change.

You may have noticed that we needed the pub keyword in front of the service function. This is because we are calling the function from outside the health module (from another crate, in fact). If we were calling it from the same module, we wouldn't need the pub keyword.

But then, how come we didn't need it for the health function as well? That's because we are using the #[get("/health")] macro, which takes care of making it public for us.

Let's opt out of using macros and do it manually.

We will leverage the route method of the ServiceConfig struct. Check out the docs.

```rust
use actix_web::{
    web::{self, ServiceConfig},
    HttpResponse,
};

pub fn service(cfg: &mut ServiceConfig) {
    cfg.route("/health", web::get().to(health));
}

async fn health() -> HttpResponse {
    HttpResponse::Ok()
        .append_header(("version", "v0.0.1"))
        .finish()
}
```

Everything should still work. Check it out and commit your changes.

git add .

git commit -m "use configure"

Unit and Integration tests

Although testing is a little bit out of the scope of this workshop, we thought it would be interesting to show you how to write tests for your API.

These will be simple examples of how to test the health endpoint.

For more information about testing in Actix Web, please refer to the Actix Web documentation.

For more information about testing in Rust, please refer to the Rust Book.

Unit tests

Unit tests usually live in the same file as the code under test. In our case, we will create a unit test for the health endpoint in the api > lib > src > health.rs file.

The common practice is to create a new module called tests. But before that, we will need to add a dev-dependency to the Cargo.toml file of our library:

```toml
[dev-dependencies]
actix-rt = "2.0.0"
```

Now, let's add this to our health.rs file:

```rust
#[cfg(test)]
mod tests {
    use actix_web::http::StatusCode;

    use super::*;

    #[actix_rt::test]
    async fn health_check_works() {
        let res = health().await;

        assert!(res.status().is_success());
        assert_eq!(res.status(), StatusCode::OK);

        let data = res
            .headers()
            .get("health-check")
            .and_then(|h| h.to_str().ok());

        assert_eq!(data, Some("v0.0.1"));
    }
}
```

A few things to note here:

- The #[cfg(test)] annotation tells the compiler to only compile the code in this module when we are running tests.
- The #[actix_rt::test] annotation runs this async test function on the Actix runtime (giving you async support).
- The use super::*; statement imports all the items from the parent module, even if they're not public (in this case, the health function).
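The visibility rule behind use super::* can be checked in a few std-only lines: the child tests module calls a private function of its parent. The api_version function is a hypothetical stand-in for our health handler.

```rust
// Not `pub`: only this module and its child modules can see it.
fn api_version() -> &'static str {
    "v0.0.1"
}

// Compiled only when building tests (for example with `cargo test`).
#[cfg(test)]
mod tests {
    // Glob-imports everything from the parent module, private items included.
    use super::*;

    #[test]
    fn private_items_are_visible_to_child_modules() {
        assert_eq!(api_version(), "v0.0.1");
    }
}

fn main() {
    println!("{}", api_version());
}
```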

Running the tests

To run the tests, you can use the following command:

```shell
cargo test

# or, if you prefer to test in watch mode:
cargo watch -x test
```

We introduced a bug in our test. Can you fix it?

Solution

The name of the header is version, not health-check. So either we change the name of the header in the test or we change it in the health function. Your call ;D.

Can you extract the version to a constant so we can reuse it in the test?

Solution

Declare the constant in the health.rs file and then use it in the health function and in the test:

```rust
const API_VERSION: &str = "v0.0.1";
```

Integration tests

Next, we're going to create an integration test for the health endpoint. This test builds an Actix App with our health service and sends a request to it, exercising the routing the way a real client would (without starting a network server).

The convention is to have a tests folder in the root of the crate under test.

Let's create this folder and add a new file called health.rs in it. The path of the file should be api > lib > tests > health.rs.

Copy this content into it:

```rust
use actix_web::{http::StatusCode, App};
use api_lib::health::{service, API_VERSION};

#[actix_rt::test]
async fn health_check_works() {
    let app = App::new().configure(service);
    let app = actix_web::test::init_service(app).await;

    let req = actix_web::test::TestRequest::get()
        .uri("/health")
        .to_request();
    let res = actix_web::test::call_service(&app, req).await;

    assert!(res.status().is_success());
    assert_eq!(res.status(), StatusCode::OK);

    let data = res.headers().get("version").and_then(|h| h.to_str().ok());
    assert_eq!(data, Some(API_VERSION));
}
```

This code will fail, can you figure out why?

Solution

We need to make the API_VERSION constant public so we can use it in the test: integration tests are compiled as a separate crate, so they can only access the library's public items. To do that, add the pub keyword to the constant declaration.

For more information about integration tests, check the links we provided at the beginning of this section.

Don't forget to commit your changes:

git add .

git commit -m "add unit and integration tests"

Films endpoints

Now we are going to build the endpoints needed to manage films.

For now, don't worry about the implementation details, we will cover them in the next chapter. We will return a 200 OK response for all the endpoints.

We're going to provide the following endpoints:

- GET /v1/films: returns a list of films.
- GET /v1/films/{id}: returns a film by id.
- POST /v1/films: creates a new film.
- PUT /v1/films: updates a film.
- DELETE /v1/films/{id}: deletes a film by id.

Creating the films module

Let's start by creating the films module in a similar way we did with the health module.

Create a new file called films.rs in the api > lib > src folder and declare the module in the lib.rs file:

```rust
pub mod films;
```

Now, let's create a new public function called service in the films module, which will be responsible for declaring all the routes for the films endpoints. You can base all this code on the health module.

Can you guess how to create all the endpoints?

Take a look at the actix_web::Scope documentation to learn how to share a common path prefix for all the routes in the scope.

Solution

```rust
use actix_web::{
    web::{self, ServiceConfig},
    HttpResponse,
};

pub fn service(cfg: &mut ServiceConfig) {
    cfg.service(
        web::scope("/v1/films")
            // get all films
            .route("", web::get().to(get_all))
            // get by id
            .route("/{film_id}", web::get().to(get))
            // post new film
            .route("", web::post().to(post))
            // update film
            .route("", web::put().to(put))
            // delete film
            .route("/{film_id}", web::delete().to(delete)),
    );
}

async fn get_all() -> HttpResponse {
    HttpResponse::Ok().finish()
}

async fn get() -> HttpResponse {
    HttpResponse::Ok().finish()
}

async fn post() -> HttpResponse {
    HttpResponse::Ok().finish()
}

async fn put() -> HttpResponse {
    HttpResponse::Ok().finish()
}

async fn delete() -> HttpResponse {
    HttpResponse::Ok().finish()
}
```

Serving the films endpoints

In order to expose these newly created endpoints we need to configure the service in our shuttle crate.

Open the main.rs file in the api > shuttle > src folder and add a new service:

```diff
- cfg.app_data(pool).configure(api_lib::health::service);
+ cfg.app_data(pool)
+     .configure(api_lib::health::service)
+     .configure(api_lib::films::service);
```

Compile the code and check that everything works as expected.

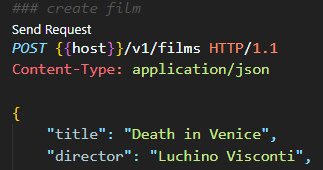

You can use curl or Postman to test the new endpoints.

Alternatively, if you have installed the REST Client extension for Visual Studio Code, you can create a file called api.http in the root of the project and copy the following content:

```http
@host = http://localhost:8000
@film_id = 6f05e5f2-133c-11ee-be9f-0ab7e0d8c876

### health
GET {{host}}/health HTTP/1.1

### create film
POST {{host}}/v1/films HTTP/1.1
Content-Type: application/json

{
    "title": "Death in Venice",
    "director": "Luchino Visconti",
    "year": 1971,
    "poster": "https://th.bing.com/th/id/R.0d441f68f2182fd7c129f4e79f6a66ef?rik=h0j7Ecvt7NBYrg&pid=ImgRaw&r=0"
}

### update film
PUT {{host}}/v1/films HTTP/1.1
Content-Type: application/json

{
    "id": "{{film_id}}",
    "title": "Death in Venice",
    "director": "Benjamin Britten",
    "year": 1981,
    "poster": "https://image.tmdb.org/t/p/original//tmT12hTzJorZxd9M8YJOQOJCqsP.jpg"
}

### get all films
GET {{host}}/v1/films HTTP/1.1

### get film
GET {{host}}/v1/films/{{film_id}} HTTP/1.1

### get bad film
GET {{host}}/v1/films/356e42a8-e659-406f-98 HTTP/1.1

### delete film
DELETE {{host}}/v1/films/{{film_id}} HTTP/1.1
```

Open it and just click on the Send Request link next to each request to send it to the server.

Commit your changes:

git add .

git commit -m "feat: add films endpoints"

Models

So now we have the films endpoints working, but they don't really do anything or return any data.

In order to return data we need to create a model for our films.

As we want to share the model between the api and the frontend crates we will use the shared crate for this.

The shared crate is a library crate. This means that it can be used by other crates in the workspace.

Let's import the dependency in the Cargo.toml file of our api-lib crate:

```diff
  [dependencies]
+ # shared
+ shared = { path = "../../shared" }
```

Verify that the project is still compiling.

Creating the Film model

We are going to create a new module called models in the shared crate.

Create a new file called models.rs in the shared > src folder and add the following code:

```rust
pub struct Film {
    pub id: uuid::Uuid, // we will be using uuids as ids
    pub title: String,
    pub director: String,
    pub year: u16, // only positive numbers
    pub poster: String, // we will use the url of the poster here
    pub created_at: Option<chrono::DateTime<chrono::Utc>>,
    pub updated_at: Option<chrono::DateTime<chrono::Utc>>,
}
```

We could make it more complicated but for the sake of simplicity we will just use a struct with a small amount of fields.

Now, remove everything from the lib.rs file in the shared crate and add the following code:

```rust
pub mod models;
```

Soon you will notice that the compiler will complain about the chrono and uuid dependencies.

Let's add them:

```diff
  [dependencies]
+ uuid = { version = "1.3.4", features = ["serde", "v4", "js"] }
+ chrono = { version = "0.4", features = ["serde"] }
```

The serde features let us serialize and deserialize these types to and from JSON. The v4 feature enables generating random UUIDs, and js lets uuid get its randomness from the browser when compiled to WebAssembly.

Compile the code and check that everything is fine.

Creating a model for the post endpoint

In our POST endpoint we will receive a JSON object with the following structure:

```json
{
    "title": "The Lord of the Rings: The Fellowship of the Ring",
    "director": "Peter Jackson",
    "year": 2001,
    "poster": "https://www.imdb.com/title/tt0120737/mediaviewer/rm1340569600/"
}
```

We don't need to pass the id or the created_at and updated_at fields as they will be generated by the API, so let's create a new model for that.

```rust
pub struct CreateFilm {
    pub title: String,
    pub director: String,
    pub year: u16,
    pub poster: String,
}
```

Compile again just in case and commit your changes:

git add .

git commit -m "add models"

Serde

Serde is a framework for serializing and deserializing Rust data structures efficiently and generically.

We are going to use it to add serialization and deserialization support to our models.

Adding the dependency

Let's add the serde dependency to the Cargo.toml file of the shared crate:

```diff
  [dependencies]
+ serde = { version = "1.0", features = ["derive"] }
```

Adding the derive feature will allow us to use the #[derive(Serialize, Deserialize)] macro on our models, which will automatically implement the Serialize and Deserialize traits for us.

As we will be working with JSON in our API, we need to bring in the serde_json crate as well in the Cargo.toml file of the api-lib crate:

```diff
  [dependencies]
+ # serde
+ serde = "1.0"
+ serde_json = "1.0"
```

Adding the Serialize and Deserialize traits to our models

Let's add the Serialize and Deserialize traits to our Film and CreateFilm models.

For that, we are going to use the derive macro:

```diff
+ use serde::{Deserialize, Serialize};

+ #[derive(Serialize, Deserialize, Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Default)]
  pub struct Film {
      pub id: uuid::Uuid, // we will be using uuids as ids
      pub title: String,
      pub director: String,
      pub year: u16, // only positive numbers
      pub poster: String, // we will use the url of the poster here
      pub created_at: Option<chrono::DateTime<chrono::Utc>>,
      pub updated_at: Option<chrono::DateTime<chrono::Utc>>,
  }

+ #[derive(Serialize, Deserialize, Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Default)]
  pub struct CreateFilm {
      pub title: String,
      pub director: String,
      pub year: u16,
      pub poster: String,
  }
```

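Note that we derived a few more traits. Because of the orphan rule, a crate that depends on shared (such as our frontend) cannot implement foreign traits like Debug or Clone for Film itself, so it's common practice for libraries to implement the standard traits their users are likely to need.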
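A simplified, std-only sketch shows what these derives buy us. To keep it dependency-free, the id and timestamp types are replaced by plain integers and some fields are dropped (an assumption for brevity). It also shows one subtlety worth knowing: derived PartialOrd and Ord compare fields in declaration order, so sorting films sorts them by id first.

```rust
// Simplified, std-only Film: the real model uses uuid::Uuid for `id`
// and chrono timestamps, replaced here by plain integers.
#[derive(Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Default)]
pub struct Film {
    pub id: u32,
    pub title: String,
    pub year: u16,
}

fn main() {
    let mut films = vec![
        Film { id: 2, title: "Death in Venice".into(), year: 1971 },
        Film { id: 1, title: "The Fellowship of the Ring".into(), year: 2001 },
    ];

    // Derived Ord compares fields in declaration order: id first,
    // then title, then year. So this sorts by id, not by title or year.
    films.sort();
    println!("first after sort: {}", films[0].title);

    // Default fills every field with its own default (0, "" and 0), and
    // struct update syntax uses it for the fields we don't spell out.
    let draft = Film { title: "Untitled".into(), ..Default::default() };
    println!("{draft:?}");
}
```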

Commit your changes:

git add .

git commit -m "add serde dependency and derive traits"

Film Repository

Today, our API will work with a Postgres database. But this may change in the future.

Even if that never happens (which is the most likely outcome), we still want to decouple our API from the database to make it easier to test and maintain.

To do that, we will leverage traits to define the behavior of our film repository.

This will also allow us to take a look at:

Defining the FilmRepository trait

We will define this trait in the api-lib crate although it could be its own crate if we wanted.

To keep it simple, create a new film_repository folder in api > lib > src and add a mod.rs file with the following content:

```rust
pub type FilmError = String;
pub type FilmResult<T> = Result<T, FilmError>;

pub trait FilmRepository: Send + Sync + 'static {
    async fn get_films(&self) -> FilmResult<Vec<Film>>;
    async fn get_film(&self, id: &Uuid) -> FilmResult<Film>;
    async fn create_film(&self, create_film: &CreateFilm) -> FilmResult<Film>;
    async fn update_film(&self, film: &Film) -> FilmResult<Film>;
    async fn delete_film(&self, id: &Uuid) -> FilmResult<Uuid>;
}
```

Don't forget to add the module to the lib.rs file:

```rust
pub mod film_repository;
```

The code won't compile. But don't worry, we will fix that in a minute.

Let's review that piece of code for a moment:

- We define two type aliases: FilmError and FilmResult<T>. This allows us to easily change the error type if we need to, and avoids boilerplate when writing the return types of our functions.
- The Send and Sync bounds allow the types implementing this trait to be sent and shared between threads, which matters because Actix Web runs our handlers on several worker threads.
- The 'static bound means implementors can't hold short-lived borrowed references. This makes our life easier, as we can keep the repository around for the entire duration of the program.
- Finally, we define 5 functions that will allow us to interact with our database. We will implement them in the next section.
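The need for Send + Sync + 'static can be reproduced with std only. Actix hands shared state to handlers through web::Data, which wraps an Arc, and std::thread::spawn has the same requirements as a multi-threaded server: the closure must be Send and 'static, and Arc<T> is only Send when T is Send + Sync. The Repository trait and CountingRepository below are hypothetical, synchronous stand-ins used only to show these bounds at work.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

// Hypothetical trait: the supertraits play the same role as in FilmRepository.
trait Repository: Send + Sync + 'static {
    fn record_request(&self) -> usize;
}

#[derive(Default)]
struct CountingRepository {
    requests: AtomicUsize,
}

impl Repository for CountingRepository {
    fn record_request(&self) -> usize {
        // fetch_add returns the previous value, so add 1 for the new count.
        self.requests.fetch_add(1, Ordering::SeqCst) + 1
    }
}

fn main() {
    // Arc<dyn Repository> is Send because the trait requires Send + Sync,
    // and it can move into spawned threads because the trait requires 'static.
    let repo: Arc<dyn Repository> = Arc::new(CountingRepository::default());

    let handles: Vec<_> = (0..4)
        .map(|_| {
            let repo = Arc::clone(&repo);
            thread::spawn(move || {
                repo.record_request();
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }

    // Four requests from the worker threads plus this one.
    println!("total requests: {}", repo.record_request());
}
```

Dropping Sync from the trait bounds would make the thread::spawn call fail to compile, which is exactly the kind of error the bounds on FilmRepository rule out up front.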

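Then, why does this code not compile?

Partly because Film, CreateFilm and Uuid aren't imported yet, but the more interesting reason is that we are using the async keyword in our trait definition. Rust compilers older than 1.75 reject async fn in traits outright. Even on newer versions, such a trait can't be used as a trait object (dyn FilmRepository), which we'll need later, and the async fn syntax can't require the returned futures to be Send.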

To fix this, we will use the async-trait crate.

async-trait

Let's bring this dependency into our api-lib crate by adding it to the Cargo.toml file. As we will be using the uuid crate in our repository, we will also add it to the Cargo.toml file:

```diff
  [dependencies]
+ # utils
+ async-trait = "0.1.82"
+ uuid = { version = "1.3.4", features = ["serde", "v4", "js"] }
```

Now, let's annotate our trait with #[async_trait::async_trait] and add all the use statements we need:

```diff
+ use shared::models::{CreateFilm, Film};
+ use uuid::Uuid;

  pub type FilmError = String;
  pub type FilmResult<T> = Result<T, FilmError>;

+ #[async_trait::async_trait]
  pub trait FilmRepository: Send + Sync + 'static {
      async fn get_films(&self) -> FilmResult<Vec<Film>>;
      async fn get_film(&self, id: &Uuid) -> FilmResult<Film>;
      async fn create_film(&self, create_film: &CreateFilm) -> FilmResult<Film>;
      async fn update_film(&self, film: &Film) -> FilmResult<Film>;
      async fn delete_film(&self, id: &Uuid) -> FilmResult<Uuid>;
  }
```

Now, the code compiles. But we still need to implement the trait. We will do it in the next section.
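Roughly speaking, #[async_trait] rewrites each async fn into an ordinary method returning a pinned, boxed future (by default also required to be Send). The sketch below writes that shape by hand with std only, so treat it as an approximation of the macro's output, not its exact expansion. FilmRepository is reduced to one method, films to title strings, and block_on is a minimal hand-rolled executor standing in for the Actix/Tokio runtime. Because the method returns a concrete boxed type, the trait stays usable as dyn FilmRepository.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

type FilmResult<T> = Result<T, String>;
// The kind of boxed future type async-trait generates behind the scenes.
type BoxFuture<'a, T> = Pin<Box<dyn Future<Output = T> + Send + 'a>>;

// Hand-written equivalent of an #[async_trait] trait with one async method.
trait FilmRepository: Send + Sync + 'static {
    fn get_films(&self) -> BoxFuture<'_, FilmResult<Vec<String>>>;
}

struct MemoryFilmRepository {
    titles: Vec<String>,
}

impl FilmRepository for MemoryFilmRepository {
    fn get_films(&self) -> BoxFuture<'_, FilmResult<Vec<String>>> {
        // The async block is the body the original `async fn` would have had.
        Box::pin(async move { Ok(self.titles.clone()) })
    }
}

// Minimal executor: polls the future on the current thread and parks
// the thread until the waker unparks it.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = Box::pin(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            Poll::Pending => thread::park(),
        }
    }
}

fn main() {
    // Usable as a trait object, which a native `async fn` trait is not.
    let repo: Box<dyn FilmRepository> = Box::new(MemoryFilmRepository {
        titles: vec!["Death in Venice".to_string()],
    });
    let films = block_on(repo.get_films());
    println!("{films:?}");
}
```

The price of this approach is one heap allocation per call, which is usually negligible next to a database round trip.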

mod.rs

You probably noticed that we created a file called mod.rs in the film_repository folder.

So far, whenever we wanted to create a new module, we just used a file with the same name as the module. For example, we created a films module by creating a films.rs file.

There are several ways to work with modules; you can learn more about them here.

Using a mod.rs file is the older convention (before the 2018 edition it was required for modules with submodules), but it's still valid and widely used in the Rust community.

Most of the time, you will do this if you plan to add more modules under the film_repository folder. For example, you could add a memory_film_repository module to implement a memory repository.

For now, let's commit our changes:

git add .

git commit -m "add film repository trait"

Implementing the FilmRepository trait

Cool, let's create a new file called postgres_film_repository.rs in the film_repository folder and add the new module to the mod.rs file in the same folder. This time don't use the pub keyword when declaring the module.

The idea is that we will re-export the implementation as if it were coming from the film_repository module. This way, we can hide the implementation details from the rest of the application.

```rust
mod postgres_film_repository;
```
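The private-module-plus-pub use pattern can be tried out in a single file with inline modules. The module and struct names mirror ours, but the connection_string field and the describe method are hypothetical placeholders. Code outside film_repository can reach the type only through the re-export.

```rust
mod film_repository {
    // Private submodule: `film_repository::postgres_film_repository` is not
    // reachable from outside `film_repository`.
    mod postgres_film_repository {
        pub struct PostgresFilmRepository {
            // Hypothetical placeholder for the sqlx::PgPool the real struct holds.
            connection_string: String,
        }

        impl PostgresFilmRepository {
            pub fn new(connection_string: &str) -> Self {
                Self {
                    connection_string: connection_string.to_string(),
                }
            }

            pub fn describe(&self) -> String {
                format!("postgres repository at {}", self.connection_string)
            }
        }
    }

    // Re-export: callers see `film_repository::PostgresFilmRepository`.
    pub use postgres_film_repository::PostgresFilmRepository;
}

fn main() {
    let repo = film_repository::PostgresFilmRepository::new("postgres://localhost/films");
    println!("{}", repo.describe());

    // This would not compile, because the submodule is private:
    // film_repository::postgres_film_repository::PostgresFilmRepository::new("...");
}
```

If we later rename or split postgres_film_repository, callers keep using film_repository::PostgresFilmRepository and don't need to change.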

Implementation

Let's open the recently created postgres_film_repository.rs file and add the following code:

```rust
pub struct PostgresFilmRepository {
    pool: sqlx::PgPool,
}
```

Note that this is a simple struct that holds a sqlx::PgPool instance. This is the connection pool we will use to connect to the database.

We don't need to expose the pool field, hence it has no pub keyword.

Now, let's add a new associated function to the struct that will make it easier to create new instances:

```rust
impl PostgresFilmRepository {
    pub fn new(pool: sqlx::PgPool) -> Self {
        Self { pool }
    }
}
```

This sort of constructor pattern is very common in Rust and the convention is to use new as the name of the associated function.

Next, let's implement the FilmRepository trait for this struct:

```rust
#[async_trait::async_trait]
impl FilmRepository for PostgresFilmRepository {
    async fn get_films(&self) -> FilmResult<Vec<Film>> {
        sqlx::query_as::<_, Film>(
            r#"
            SELECT id, title, director, year, poster, created_at, updated_at
            FROM films
            "#,
        )
        .fetch_all(&self.pool)
        .await
        .map_err(|e| e.to_string())
    }

    async fn get_film(&self, film_id: &uuid::Uuid) -> FilmResult<Film> {
        sqlx::query_as::<_, Film>(
            r#"
            SELECT id, title, director, year, poster, created_at, updated_at
            FROM films
            WHERE id = $1
            "#,
        )
        .bind(film_id)
        .fetch_one(&self.pool)
        .await
        .map_err(|e| e.to_string())
    }

    async fn create_film(&self, create_film: &CreateFilm) -> FilmResult<Film> {
        sqlx::query_as::<_, Film>(
            r#"
            INSERT INTO films (title, director, year, poster)
            VALUES ($1, $2, $3, $4)
            RETURNING id, title, director, year, poster, created_at, updated_at
            "#,
        )
        .bind(&create_film.title)
        .bind(&create_film.director)
        .bind(create_film.year as i16)
        .bind(&create_film.poster)
        .fetch_one(&self.pool)
        .await
        .map_err(|e| e.to_string())
    }

    async fn update_film(&self, film: &Film) -> FilmResult<Film> {
        sqlx::query_as::<_, Film>(
            r#"
            UPDATE films
            SET title = $2, director = $3, year = $4, poster = $5
            WHERE id = $1
            RETURNING id, title, director, year, poster, created_at, updated_at
            "#,
        )
        .bind(film.id)
        .bind(&film.title)
        .bind(&film.director)
        .bind(film.year as i16)
        .bind(&film.poster)
        .fetch_one(&self.pool)
        .await
        .map_err(|e| e.to_string())
    }

    async fn delete_film(&self, film_id: &uuid::Uuid) -> FilmResult<uuid::Uuid> {
        sqlx::query_scalar::<_, uuid::Uuid>(
            r#"
            DELETE FROM films
            WHERE id = $1
            RETURNING id
            "#,
        )
        .bind(film_id)
        .fetch_one(&self.pool)
        .await
        .map_err(|e| e.to_string())
    }
}
```

Don't forget to add the necessary imports:

```rust
use super::{FilmRepository, FilmResult};
use shared::models::{CreateFilm, Film};
```

Note that this code won't compile yet. Don't worry, we will fix it in a moment.

Take the time to review the code. Unfortunately, going deep into the details of SQLx is out of the scope of this tutorial. However, if you are interested in learning more about it, you can check the SQLx documentation.

Fixing the compilation error

If you check the compiler error you will see that it is complaining about the Film struct. It is telling us that it doesn't implement the FromRow trait.

This is because we are using the query_as method from SQLx, which requires that the struct implements the FromRow trait.

```text
4 | pub struct Film {
  | --------------- doesn't satisfy `Film: FromRow<'r, PgRow>`
  |
  = note: the following trait bounds were not satisfied:
          `Film: FromRow<'r, PgRow>`
```

Let's fix this by implementing the FromRow trait for the Film struct.

We must do this in the shared crate, because the Film struct is defined there.

Add the SQLx dependency to the Cargo.toml file in the shared crate:

```toml
# database
sqlx = { version = "0.7", default-features = false, features = [
    "tls-native-tls",
    "macros",
    "postgres",
    "uuid",
    "chrono",
    "json",
] }
```

And then add the sqlx::FromRow trait into the derive attribute of the Film and CreateFilm structs.

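Now we will hit another compiler error: SQLx's Postgres driver can't decode into a u16 (Postgres has no unsigned integer types), so FromRow can't handle the year field as-is.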

Let's add a new annotation to the year field in both structs:

```rust
#[sqlx(try_from = "i16")]
```
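Conceptually, #[sqlx(try_from = "i16")] makes the FromRow derive decode the column as an i16 (the Rust type SQLx maps to Postgres SMALLINT) and then convert it into the field's u16 with TryFrom. An out-of-range value therefore surfaces as a decoding error instead of silently changing. The write path behaves differently: our bind calls use `year as i16`, an unchecked cast that wraps values above i16::MAX. The std-only sketch below mimics both directions with two hypothetical helpers; it is not SQLx code.

```rust
use std::convert::TryFrom;

// Read path, conceptually what `#[sqlx(try_from = "i16")]` does:
// take the i16 decoded from the database and convert it with TryFrom.
fn year_from_db(raw: i16) -> Result<u16, String> {
    u16::try_from(raw).map_err(|e| format!("invalid year {raw}: {e}"))
}

// Write path, what `.bind(film.year as i16)` does: an `as` cast, which
// never fails but wraps values above i16::MAX (32_767).
fn year_to_db(year: u16) -> i16 {
    year as i16
}

fn main() {
    println!("{:?}", year_from_db(1971)); // Ok(1971)
    println!("{:?}", year_from_db(-5)); // an Err describing the bad value
    println!("{}", year_to_db(2001)); // 2001
    println!("{}", year_to_db(40_000)); // -25536, silently wrapped
}
```

For film years the wrap-around is harmless in practice, but it's worth knowing that only the read path is checked.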

This is how the models.rs file should look:

```rust
use serde::{Deserialize, Serialize};

#[derive(
    Serialize, Deserialize, Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Default, sqlx::FromRow,
)]
pub struct Film {
    pub id: uuid::Uuid, // we will be using uuids as ids
    pub title: String,
    pub director: String,
    #[sqlx(try_from = "i16")]
    pub year: u16, // only positive numbers
    pub poster: String, // we will use the url of the poster here
    pub created_at: Option<chrono::DateTime<chrono::Utc>>,
    pub updated_at: Option<chrono::DateTime<chrono::Utc>>,
}

#[derive(
    Serialize, Deserialize, Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Default, sqlx::FromRow,
)]
pub struct CreateFilm {
    pub title: String,
    pub director: String,
    #[sqlx(try_from = "i16")]
    pub year: u16,
    pub poster: String,
}
```

Supporting WebAssembly

We are almost done. The code is compiling but we still need to do some changes to make this shared crate work in the browser.

Our frontend will be compiled to WebAssembly, so we need to make sure that the shared crate can be compiled to WebAssembly.

The problem that we will face is that SQLx doesn't support WebAssembly yet.

So, how to solve this? Enter Cargo Features.

We will compile certain parts of the code only when a certain feature is enabled.

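Note that tests rely on the same conditional-compilation mechanism. If you remember when we looked at them, each test module is preceded by a #[cfg(test)] annotation, which means the code inside that module is only compiled when building tests. The difference is that test is a built-in configuration flag set by cargo test, whereas Cargo features are checked with #[cfg(feature = "...")].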

Adding the backend feature

The idea is that we will only use the FromRow trait when the backend feature is enabled.

This should be true for all the backend code (the api-lib crate) but not for the frontend code.

Let's add the backend feature to the Cargo.toml file in the shared crate:

```toml
[features]
backend = ["sqlx"]
```

Then modify the sqlx dependency to make it optional:

```toml
sqlx = { version = "0.7", default-features = false, features = [
    "tls-native-tls",
    "macros",
    "postgres",
    "uuid",
    "chrono",
    "json",
], optional = true }
```

That's it. As the SQLx dependency is now optional, it will only be used in case the backend feature is enabled.
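Feature gating can be tried out without SQLx. The std-only sketch below assumes a crate whose Cargo.toml declares `backend = []` under [features]. The simplified Film, the table_name helper and backend_enabled are hypothetical. Built with plain `cargo run`, the backend-only impl and the extra Clone derive are compiled out entirely. With `cargo run --features backend`, they are compiled in.

```rust
// Simplified model: the extra derive only exists with the backend feature,
// just like the sqlx::FromRow derive in the shared crate.
#[cfg_attr(feature = "backend", derive(Clone))]
#[derive(Debug, Default, PartialEq)]
pub struct Film {
    pub title: String,
    pub year: u16,
}

// Backend-only code: left out of the build unless `--features backend` is set.
#[cfg(feature = "backend")]
impl Film {
    pub fn table_name() -> &'static str {
        "films"
    }
}

// `cfg!` evaluates the same condition to a plain bool at compile time.
pub fn backend_enabled() -> bool {
    cfg!(feature = "backend")
}

fn main() {
    println!("backend feature enabled: {}", backend_enabled());
    println!("{:?}", Film::default());
}
```

Code that calls Film::table_name() only compiles when the feature is on. That is why the frontend, which never enables backend, never pulls in SQLx.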

Using the backend feature

Modify the models.rs file in the shared crate to look like this:

```rust
use serde::{Deserialize, Serialize};

#[cfg_attr(feature = "backend", derive(sqlx::FromRow))]
#[derive(Serialize, Deserialize, Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Default)]
pub struct Film {
    pub id: uuid::Uuid,
    pub title: String,
    pub director: String,
    #[cfg_attr(feature = "backend", sqlx(try_from = "i16"))]
    pub year: u16,
    pub poster: String,
    pub created_at: Option<chrono::DateTime<chrono::Utc>>,
    pub updated_at: Option<chrono::DateTime<chrono::Utc>>,
}

#[cfg_attr(feature = "backend", derive(sqlx::FromRow))]
#[derive(Serialize, Deserialize, Debug, Clone, PartialEq, Eq, PartialOrd, Ord, Default)]
pub struct CreateFilm {
    pub title: String,
    pub director: String,
    #[cfg_attr(feature = "backend", sqlx(try_from = "i16"))]
    pub year: u16,
    pub poster: String,
}
```

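But... the code doesn't compile!

That's because our api-lib crate still needs Film to implement FromRow, and that implementation now only exists when the backend feature is enabled. No problem: we just need to enable the backend feature for shared in the Cargo.toml file of the api-lib crate: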

```diff
  # shared
- shared = { path = "../../shared" }
+ shared = { path = "../../shared", features = ["backend"] }
```

We should be good by now but there's still a small detail to cover.

We want our PostgresFilmRepository struct to be available outside the film_repository module, so we need to expose it.

Head to the mod.rs file in api > lib > src > film_repository and add the following line:

```rust
pub use postgres_film_repository::PostgresFilmRepository;
```

Try to build a new module called memory_film_repository that implements the FilmRepository trait and uses an in-memory data structure to store the films.

You can also add tests to your implementation.

HINT: You can take a look at the workshop GitHub repository if you get stuck.

Plenty of work in this section. Check that everything compiles and commit your changes:

git add .

git commit -m "add postgres film repository"

Injecting the repository

Ok, so now we have our shared library working both for the frontend and the backend. We have our FilmRepository trait and even a Postgres implementation of it. Now we need to inject the repository into our handlers.

If you take a look again at the main.rs file of our api-shuttle crate, you will see that we were already sharing the sqlx::PgPool between the handlers.

We will do the same with the FilmRepository trait.

Creating a PostgresFilmRepository instance

Let's create a new instance of the PostgresFilmRepository struct in the main.rs file of our api-shuttle crate:

```diff
- let pool = actix_web::web::Data::new(pool);
+ let film_repository = api_lib::film_repository::PostgresFilmRepository::new(pool);
+ let film_repository = actix_web::web::Data::new(film_repository);

- cfg.app_data(pool)
+ cfg.app_data(film_repository)
```

Once you apply this change, everything should compile and work as before.

Commit your changes:

git add .

git commit -m "inject film repository"

Implementing the endpoints

In this section we are going to implement all the film endpoints.

One thing we know for sure is that all our handlers will need access to a FilmRepository instance to do their work.

We already injected a particular implementation of the FilmRepository trait in our api-shuttle crate, but remember that here, in the api-lib crate, we don't know which particular implementation we are going to use.

Indeed, we shouldn't care about the implementation details of the FilmRepository trait in our api-lib crate. We should only care about the fact that we have a FilmRepository trait that we can use to interact with the database.

So, it seems clear that we need to get access to the FilmRepository instance in our handlers. But how can we do that?

Refresh your memory by reading about how to handle state in Actix Web in the official documentation.

It looks pretty straightforward, doesn't it? But wait a minute: we have a problem here.

In all these examples, in order to extract a particular state we need to know its type. But we said we don't care about the concrete type of the FilmRepository instance; we only care that it implements the FilmRepository trait.

How can we reconcile these two things?
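To see why the type matters so much, it helps to know that Actix Web stores app data in a map keyed by the exact type of each value (its TypeId), and the web::Data<T> extractor looks up precisely Data<T>. Below is a simplified model of that lookup built on std::any. It is not Actix's actual implementation, and the types are stand-ins.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

// Minimal type-keyed map, similar in spirit to how app data is stored:
// each value is filed under the TypeId of its exact type.
#[derive(Default)]
struct TypeMap {
    values: HashMap<TypeId, Box<dyn Any>>,
}

impl TypeMap {
    fn insert<T: 'static>(&mut self, value: T) {
        self.values.insert(TypeId::of::<T>(), Box::new(value));
    }

    // Only succeeds when T is exactly the type that was inserted.
    fn get<T: 'static>(&self) -> Option<&T> {
        self.values.get(&TypeId::of::<T>())?.downcast_ref::<T>()
    }
}

trait FilmRepository {
    fn name(&self) -> &'static str;
}

struct PostgresFilmRepository;

impl FilmRepository for PostgresFilmRepository {
    fn name(&self) -> &'static str {
        "postgres"
    }
}
```

Registering a PostgresFilmRepository and then asking for a Box<dyn FilmRepository> finds nothing, because they are different keys. In Actix Web 4 that mismatch isn't caught at compile time: the Data extractor fails at request time and the handler responds with an internal server error. The type you register with app_data and the type your handlers extract must match exactly.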

We have two options here.

We're going to cover both only briefly, as a deeper discussion is out of the scope of this workshop.

Dynamic dispatch

The first option is to use dynamic dispatch.

This will generally make our code a little slower, since every method call goes through a vtable and the repository sits behind a heap allocation (often this doesn't really matter), but it will allow us to easily abstract away the particular trait implementations.

Learn more about this topic in the official Rust book.

The basic idea here is that we will use a Box<dyn FilmRepository> as our state type. This will allow us to store any type that implements the FilmRepository trait in our state.
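Here is the same idea stripped down to plain Rust, so you can see dynamic dispatch in isolation. The trait is a hypothetical, synchronous, one-method version of FilmRepository, and both implementations are stand-ins (no database involved).

```rust
// Hypothetical one-method, synchronous version of the trait.
trait FilmRepository {
    fn get_films(&self) -> Vec<String>;
}

// Stand-in implementations: neither touches a real database.
struct PostgresFilmRepository;

struct MemoryFilmRepository {
    films: Vec<String>,
}

impl FilmRepository for PostgresFilmRepository {
    fn get_films(&self) -> Vec<String> {
        vec!["film from postgres".to_string()]
    }
}

impl FilmRepository for MemoryFilmRepository {
    fn get_films(&self) -> Vec<String> {
        self.films.clone()
    }
}

// The concrete type is picked at runtime; callers only ever see the trait object.
fn pick_repository(use_memory: bool) -> Box<dyn FilmRepository> {
    if use_memory {
        Box::new(MemoryFilmRepository {
            films: vec!["Alien".to_string(), "Heat".to_string()],
        })
    } else {
        Box::new(PostgresFilmRepository)
    }
}

// Works with any implementation; the call is resolved through the vtable.
fn count_films(repo: &dyn FilmRepository) -> usize {
    repo.get_films().len()
}
```

Both boxes have the same type, Box<dyn FilmRepository>, so they can live in the same Vec or behind the same web::Data; the price is the indirection on every call.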

- let film_repository = actix_web::web::Data::new(film_repository);

+ let film_repository: actix_web::web::Data<Box<dyn api_lib::film_repository::FilmRepository>> =

+ actix_web::web::Data::new(Box::new(film_repository));

Then, in our handlers, we will add this parameter:

repo: actix_web::web::Data<Box<dyn crate::film_repository::FilmRepository>>

For instance, in our get_all handler, we would use it like this:

match repo.get_films().await {
    Ok(films) => HttpResponse::Ok().json(films),
    Err(e) => HttpResponse::InternalServerError().body(format!("Internal server error: {:?}", e)),
}

If you test that endpoint, you will see that it works as expected.

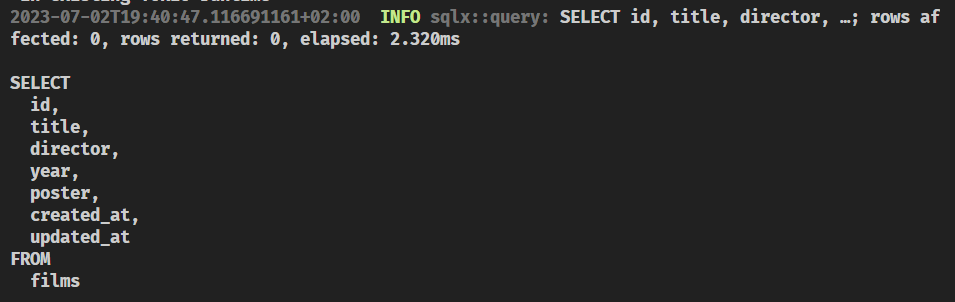

If you look at your terminal, you should also be able to see the SQL query that was executed.

This is fairly easy, it works, and it's a common option.

Let's implement all the endpoints with this approach and then we'll see the second option.

Implementing the endpoints

Do you want to give it a try?

Solution

Make sure the code in the films.rs file of the api-lib crate (api/lib/src/films.rs) looks like this:

use actix_web::{
    web::{self, ServiceConfig},
    HttpResponse,
};
use shared::models::{CreateFilm, Film};
use uuid::Uuid;

use crate::film_repository::FilmRepository;

type Repository = web::Data<Box<dyn FilmRepository>>;

pub fn service(cfg: &mut ServiceConfig) {
    cfg.service(
        web::scope("/v1/films")
            // get all films
            .route("", web::get().to(get_all))
            // get by id
            .route("/{film_id}", web::get().to(get))
            // post new film
            .route("", web::post().to(post))
            // update film
            .route("", web::put().to(put))
            // delete film
            .route("/{film_id}", web::delete().to(delete)),
    );
}

async fn get_all(repo: Repository) -> HttpResponse {
    match repo.get_films().await {
        Ok(films) => HttpResponse::Ok().json(films),
        Err(e) => {
            HttpResponse::InternalServerError().body(format!("Internal server error: {:?}", e))
        }
    }
}

async fn get(film_id: web::Path<Uuid>, repo: Repository) -> HttpResponse {
    match repo.get_film(&film_id).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(_) => HttpResponse::NotFound().body("Not found"),
    }
}

async fn post(create_film: web::Json<CreateFilm>, repo: Repository) -> HttpResponse {
    match repo.create_film(&create_film).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(e) => {
            HttpResponse::InternalServerError().body(format!("Internal server error: {:?}", e))
        }
    }
}

async fn put(film: web::Json<Film>, repo: Repository) -> HttpResponse {
    match repo.update_film(&film).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(e) => HttpResponse::NotFound().body(format!("Internal server error: {:?}", e)),
    }
}

async fn delete(film_id: web::Path<Uuid>, repo: Repository) -> HttpResponse {
    match repo.delete_film(&film_id).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(e) => {
            HttpResponse::InternalServerError().body(format!("Internal server error: {:?}", e))
        }
    }
}

Test the API by using the api.http file if you created it in one of the previous sections or by using any other tool.

Commit your changes:

git add .

git commit -m "implement film endpoints"

Static dispatch

You can check out this section of the Rust Book to learn about some of the trade-offs of using dynamic dispatch.

In this section, we're going to learn how to use generics to leverage static dispatch.
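Before touching the handlers, here's the core idea in isolation, again with a hypothetical, synchronous, one-method trait. Instead of taking a trait object, the function takes a type parameter R bounded by the trait.

```rust
// Hypothetical one-method, synchronous version of the trait.
trait FilmRepository {
    fn get_films(&self) -> Vec<String>;
}

struct MemoryFilmRepository {
    films: Vec<String>,
}

impl FilmRepository for MemoryFilmRepository {
    fn get_films(&self) -> Vec<String> {
        self.films.clone()
    }
}

// Generic over any R that implements the trait. No Box, no vtable: the
// compiler emits a dedicated copy of this function for each concrete R.
fn count_films<R: FilmRepository>(repo: &R) -> usize {
    repo.get_films().len()
}
```

Because of this monomorphization, calls are resolved at compile time and can be inlined. The flip side is that the concrete type must be known at compile time, which is exactly what's going to bite us in a moment.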

Refactor the film endpoints

Let's change all the code to use generics instead of trait objects:

use actix_web::{
    web::{self, ServiceConfig},
    HttpResponse,
};
use shared::models::{CreateFilm, Film};
use uuid::Uuid;

use crate::film_repository::FilmRepository;

pub fn service<R: FilmRepository>(cfg: &mut ServiceConfig) {
    cfg.service(
        web::scope("/v1/films")
            // get all films
            .route("", web::get().to(get_all::<R>))
            // get by id
            .route("/{film_id}", web::get().to(get::<R>))
            // post new film
            .route("", web::post().to(post::<R>))
            // update film
            .route("", web::put().to(put::<R>))
            // delete film
            .route("/{film_id}", web::delete().to(delete::<R>)),
    );
}

async fn get_all<R: FilmRepository>(repo: web::Data<R>) -> HttpResponse {
    match repo.get_films().await {
        Ok(films) => HttpResponse::Ok().json(films),
        Err(e) => {
            HttpResponse::InternalServerError().body(format!("Internal server error: {:?}", e))
        }
    }
}

async fn get<R: FilmRepository>(film_id: web::Path<Uuid>, repo: web::Data<R>) -> HttpResponse {
    match repo.get_film(&film_id).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(_) => HttpResponse::NotFound().body("Not found"),
    }
}

async fn post<R: FilmRepository>(
    create_film: web::Json<CreateFilm>,
    repo: web::Data<R>,
) -> HttpResponse {
    match repo.create_film(&create_film).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(e) => {
            HttpResponse::InternalServerError().body(format!("Internal server error: {:?}", e))
        }
    }
}

async fn put<R: FilmRepository>(film: web::Json<Film>, repo: web::Data<R>) -> HttpResponse {
    match repo.update_film(&film).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(e) => HttpResponse::NotFound().body(format!("Internal server error: {:?}", e)),
    }
}

async fn delete<R: FilmRepository>(film_id: web::Path<Uuid>, repo: web::Data<R>) -> HttpResponse {
    match repo.delete_film(&film_id).await {
        Ok(film) => HttpResponse::Ok().json(film),
        Err(e) => {
            HttpResponse::InternalServerError().body(format!("Internal server error: {:?}", e))
        }
    }
}

Hinting the compiler

If you try to compile the code, you'll get an error:

error[E0282]: type annotations needed
  --> api/shuttle/src/main.rs:22:24
   |
22 | .configure(api_lib::films::service);
   |            ^^^^^^^^^^^^^^^^^^^^^^^ cannot infer type of the type parameter `R` declared on the function `service`
   |
help: consider specifying the generic argument
   |
22 | .configure(api_lib::films::service::<R>);
   |                                   +++++

For more information about this error, try `rustc --explain E0282`.
error: could not compile `api-shuttle` (bin "api-shuttle") due to previous error

Error: Build failed. Is the Shuttle runtime missing?
[Finished running. Exit status: 1]

But the compiler is giving us a hint on how to fix it. Let's do it.

Open the main.rs file of our api-shuttle crate and change a couple of things:

let film_repository = api_lib::film_repository::PostgresFilmRepository::new(pool);

- let film_repository: actix_web::web::Data<Box<dyn api_lib::film_repository::FilmRepository>> =

- actix_web::web::Data::new(Box::new(film_repository));

+ let film_repository = actix_web::web::Data::new(film_repository);

let config = move |cfg: &mut ServiceConfig| {

cfg.app_data(film_repository)

.configure(api_lib::health::service)

- .configure(api_lib::films::service);

+ .configure(api_lib::films::service::<api_lib::film_repository::PostgresFilmRepository>);

};

This should be enough to make the compiler happy. Now it knows what type to use for the R generic parameter.
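The same inference problem can be reproduced without Actix Web. In this sketch, Config is a hypothetical stand-in for ServiceConfig whose configure method has the same shape (it takes a function that mutates the config). Because R only appears in service's bounds and never in its arguments, the call site has to name it with a turbofish.

```rust
// Hypothetical trait with an associated function, so R is only ever used as a type.
trait FilmRepository {
    fn label() -> &'static str;
}

struct PostgresFilmRepository;
struct MemoryFilmRepository;

impl FilmRepository for PostgresFilmRepository {
    fn label() -> &'static str {
        "postgres"
    }
}

impl FilmRepository for MemoryFilmRepository {
    fn label() -> &'static str {
        "memory"
    }
}

// Hypothetical stand-in for ServiceConfig that just records registered routes.
#[derive(Default)]
struct Config {
    routes: Vec<String>,
}

impl Config {
    // Same shape as ServiceConfig::configure: accepts a function that mutates the config.
    fn configure<F: FnOnce(&mut Config)>(&mut self, f: F) -> &mut Self {
        f(&mut *self);
        self
    }
}

// R appears only in the bound, never in the arguments,
// so it cannot be inferred at the call site.
fn service<R: FilmRepository>(cfg: &mut Config) {
    cfg.routes.push(format!("/v1/films -> {}", R::label()));
}
```

Writing cfg.configure(service) here fails with the same E0282 error we got from the real code, while cfg.configure(service::<PostgresFilmRepository>) compiles.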

Check that everything works as expected and commit your changes:

git add .

git commit -m "refactor film endpoints to use generics"

Serving static files

In this section of the backend part of the workshop, we'll learn how to serve static files with Actix Web and Shuttle.

The main goal here is to serve the static files present in a folder called static.

The API will serve the static files at the root path / and the API endpoints under the /api path.

To make this happen, we will need to refactor our api-shuttle main code a little.
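Registration order matters here: Actix Web tries services in the order they are registered, and a static files service mounted at / matches every path, so the /api scope has to be registered before it. The following sketch models that first-match behavior in plain Rust; the route function and the Target enum are hypothetical and not part of Actix Web's router.

```rust
// Which service ends up handling a request.
#[derive(Debug, PartialEq)]
enum Target {
    Api(String),        // path relative to the /api scope
    StaticFile(String), // path relative to the static folder
}

// Treats `prefix` as a whole path segment, so "/apis.html" does not match "/api".
fn strip_scope<'a>(path: &'a str, prefix: &str) -> Option<&'a str> {
    let rest = path.strip_prefix(prefix)?;
    if rest.is_empty() || rest.starts_with('/') {
        Some(rest)
    } else {
        None
    }
}

// Hypothetical first-match router: services are tried in registration order.
fn route(path: &str) -> Target {
    // 1. The /api scope is registered first...
    if let Some(rest) = strip_scope(path, "/api") {
        return Target::Api(rest.to_string());
    }
    // 2. ...and the static files service at "/" catches everything else,
    //    falling back to index.html for the root path.
    let file = path.trim_start_matches('/');
    let file = if file.is_empty() { "index.html" } else { file };
    Target::StaticFile(file.to_string())
}
```

If the two steps were swapped, the catch-all at / would answer every request, including /api/v1/films.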

Shuttle dependencies

Read the Shuttle documentation for static files.

Some of the caveats explained there apply to us because we are using a workspace, but let's start from the beginning.

Let's add the shuttle-static-folder and the actix-files dependencies to our api-shuttle crate.

[dependencies]

# static

actix-files = "0.6.6"

Serving the static files

Now, let's refactor our main.rs file to serve the static files.

Let's modify our ServiceConfig to serve static files in the / path and the API in the /api path:

- cfg.app_data(film_repository)

- .configure(api_lib::health::service)

- .configure(api_lib::films::service::<api_lib::film_repository::PostgresFilmRepository>);

+ cfg.service(

+ web::scope("/api")

+ .app_data(film_repository)

+ .configure(api_lib::health::service)

+ .configure(

+ api_lib::films::service::<api_lib::film_repository::PostgresFilmRepository>,

+ ),

+ )

+ .service(Files::new("/", "static").index_file("index.html"));

Final Code

use actix_files::Files;
use actix_web::web::{self, ServiceConfig};
use shuttle_actix_web::ShuttleActixWeb;
use shuttle_runtime::CustomError;
use sqlx::Executor;
use std::path::PathBuf;

#[shuttle_runtime::main]
async fn actix_web(
    #[shuttle_shared_db::Postgres] pool: sqlx::PgPool,
    #[shuttle_static_folder::StaticFolder(folder = "static")] static_folder: PathBuf,
) -> ShuttleActixWeb<impl FnOnce(&mut ServiceConfig) + Send + Clone + 'static> {
    // initialize the database if not already initialized
    pool.execute(include_str!("../../db/schema.sql"))
        .await
        .map_err(CustomError::new)?;

    let film_repository = api_lib::film_repository::PostgresFilmRepository::new(pool);
    let film_repository = web::Data::new(film_repository);

    let config = move |cfg: &mut ServiceConfig| {
        cfg.service(
            web::scope("/api")
                .app_data(film_repository)
                .configure(api_lib::health::service)
                .configure(
                    api_lib::films::service::<api_lib::film_repository::PostgresFilmRepository>,
                ),
        )
        .service(Files::new("/", "static").index_file("index.html"));
    };

    Ok(config.into())
}

If you run the project now with cargo shuttle run, you will get a runtime error:

[Running 'cargo shuttle run']
Building /home/roberto/GIT/github/robertohuertasm/devbcn-dry-run
Compiling api-shuttle v0.1.0 (/home/roberto/GIT/github/robertohuertasm/devbcn-dry-run/api/shuttle)
Finished dev [unoptimized + debuginfo] target(s) in 9.00s
2023-07-02T18:49:07.514534Z ERROR cargo_shuttle: failed to load your service error="Custom error: failed to provision shuttle_static_folder :: StaticFolder"
[Finished running. Exit status: 1]

That's mainly because the static folder doesn't exist yet.